Main Takeaways

- Kubernetes cost optimization is about aligning requests, limits, and actual usage continuously

- Manual tuning doesn’t scale; real savings come from tools that interpret usage patterns and automate resource adjustments

- The best platforms act, not just report or suggest, and are application context-aware

Kubernetes cost optimization isn’t just about trimming a few dollars from your cloud bill. It’s about regaining control.

You scaled fast. Clusters sprawled, workloads multiplied, and now your cloud bill is a black box of overprovisioned resources and unknown waste. Finance wants answers. Engineering wants performance. And you’re stuck in the middle, trying to rightsize a system that was never designed with cost as a first-class concern.

This post isn’t going to tell you to “monitor usage” or “turn on autoscaling.” You’ve done that. Instead, we’ll break down where Kubernetes actually leaks money in production, and what you can do about it with surgical precision.

What Kubernetes Cost Optimization Software Actually Does

At its core, Kubernetes cost optimization is the continuous process of aligning three variables:

- Resource requests: What you promise the scheduler

- Resource limits: What you allow the container to use

- Actual usage: What the app actually consumes in production

When these are out of sync, you quite literally pay the price. Over-request, and you waste capacity. Under-request, and you risk eviction or throttling. Set no limits, and noisy neighbors can take down your node.
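To make the three failure modes concrete, here is a minimal Python sketch that labels a container by comparing its request and limit against observed usage. The `ContainerSample` type, the 20% headroom, and the 1.5x over-request cutoff are all illustrative assumptions, not values from any particular tool.

```python
from dataclasses import dataclass


@dataclass
class ContainerSample:
    """CPU settings and observed usage for one container, in millicores."""
    name: str
    request_m: int    # what the scheduler reserves
    limit_m: int      # hard ceiling; 0 means no limit is set (assumed convention)
    usage_p95_m: int  # observed 95th-percentile usage


def classify(c: ContainerSample, headroom: float = 0.2) -> str:
    """Label a container as no-limit, over-requested, under-requested, or aligned."""
    # Target is observed usage plus a safety margin (assumed 20% by default).
    target = c.usage_p95_m * (1 + headroom)
    if c.limit_m == 0:
        return "no-limit"          # noisy-neighbor risk
    if c.request_m > 1.5 * target:
        return "over-requested"    # paying for capacity that sits unused
    if c.request_m < c.usage_p95_m:
        return "under-requested"   # eviction or contention risk
    return "aligned"
```

Running `classify` over every container in a namespace gives a rough first pass at where requests have drifted from reality; a real tool would look at memory as well and at far more than a single percentile.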

This isn’t a one-time tuning task. Your workloads shift constantly: peak traffic, deploys, memory leaks, GC behavior, kernel upgrades, even marketing events. Optimizing resources is like tuning a live instrument in a crowded room.

The goal isn’t just efficiency; it’s maintaining performance without overpaying for it.

We’re not chasing perfection here. We’re trying to get close enough, continuously, and with enough margin to avoid incidents.

Where Kubernetes Cost Optimization Software Comes In

Manual optimization doesn’t scale, which is exactly why Kubernetes cost optimization software exists: not just to surface data, but to interpret it in context and suggest (or automatically apply) concrete actions.

Kubernetes cost optimization software typically integrates with the Kubernetes API or deploys agents to collect container-level telemetry:

- CPU, memory, I/O, and network usage

- Pod lifecycle events and node health

- Storage and network attachment metadata

From there, these tools apply statistical analysis and ML techniques to generate actionable insights:

- Time-series forecasting to predict usage trends across daily/weekly/seasonal patterns

- Percentile-based usage analysis (e.g., P95, P99) to avoid misleading averages

- Workload clustering to group pods with similar behavior (batch jobs vs. web APIs, for instance)

- Resource dependency mapping, such as CPU-to-memory correlation under load

This isn’t just academic modeling. The best tools feed this intelligence back into your resource definitions and autoscaling policies to drive real savings.
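As a rough illustration of why percentiles beat averages, the sketch below computes a nearest-rank percentile over usage samples and turns it into a suggested request. The function names and the 15% headroom are assumptions made for this example; real platforms use longer time windows and more elaborate models.

```python
import math


def percentile(samples: list[float], p: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of values at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_request(samples: list[float], p: int = 95, headroom_pct: int = 15) -> int:
    """Suggest a request: the chosen percentile plus a safety margin, rounded up."""
    return math.ceil(percentile(samples, p) * (100 + headroom_pct) / 100)


# A bursty workload: mostly 100m, with spikes to 400m (hypothetical data).
cpu_millicores = [100] * 14 + [400] * 6
average = sum(cpu_millicores) / len(cpu_millicores)
```

For this sample the average is 190m while the P95 is 400m, so a request sized to the average would leave the container short during every burst.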

5 Capabilities Every Kubernetes Cost Optimization Software Should Have

1. Auto-Scaling Optimization

Kubernetes’ native autoscalers provide basic scaling functionality, but cost optimization software enhances these mechanisms with intelligence and context awareness. Advanced platforms integrate with the Horizontal Pod Autoscaler (HPA), Vertical Pod Autoscaler (VPA), and Cluster Autoscaler to improve their effectiveness.

Key enhancements include:

- Predictive Scaling: Anticipating demand changes before they occur

- Multi-metric Optimization: Considering cost alongside performance metrics

- Workload-aware Scaling: Applying different scaling strategies based on application characteristics

- Integration with Spot Instances: Optimizing use of lower-cost compute options
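For reference, the baseline these enhancements build on is the HPA's documented calculation: scale the current replica count by the ratio of the observed metric to the target, round up, and skip the change when the ratio is within a tolerance (0.1 by default). The Python sketch below reproduces that arithmetic; the function name and the min/max clamping parameters are illustrative, and the predictive and cost-aware layers a commercial platform adds are not shown.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Compute the replica count the HPA formula would ask for."""
    ratio = current_metric / target_metric
    # Within the tolerance band, the HPA leaves the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

With 4 replicas averaging 90% CPU against a 60% target, the formula asks for 6. Because it only reacts to what has already been measured, it lags behind sudden demand, which is the gap predictive scaling tries to close.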

2. Monitoring and Reporting

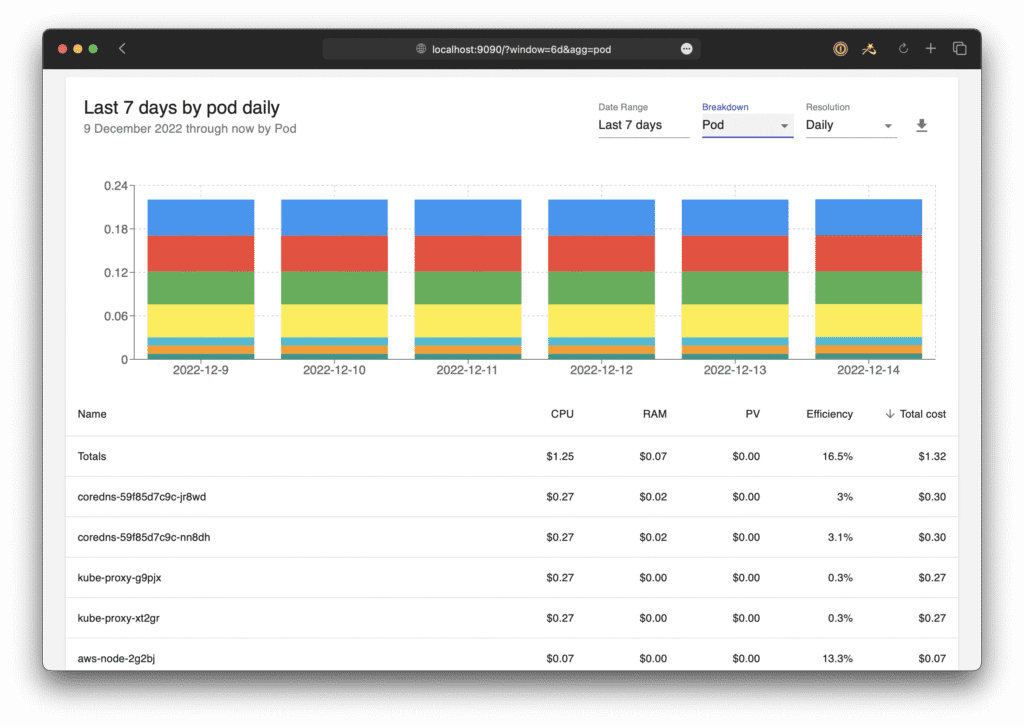

Comprehensive monitoring capabilities provide the visibility necessary for effective cost management. Modern software offers real-time dashboards and detailed reporting across multiple dimensions:

- Resource Utilization Metrics: Track CPU, memory, storage, and network usage across clusters, namespaces, and individual pods.

- Cost Attribution: Detailed breakdowns by team, project, application, or business unit to enable accurate chargeback and showback models.

- Trend Analysis: Historical cost data and usage patterns to identify opportunities for optimization and budget planning.

- Performance Correlation: Understanding the relationship between resource allocation and application performance to optimize without compromising user experience.

3. Idle Resource Detection

One of the most significant sources of waste in Kubernetes environments is idle or underutilized resources. Cost optimization software implements sophisticated detection mechanisms to identify:

- Unused Persistent Volumes: Storage allocated but not actively used by applications

- Idle Pods: Containers consuming resources without performing useful work

- Orphaned Resources: Load balancers, ingress controllers, and other infrastructure components no longer needed

- Development Environment Waste: Non-production clusters running outside of business hours

Automated detection helps organizations quickly identify and eliminate waste, often resulting in immediate cost savings of 20-40%.
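A simple version of idle-pod detection can be sketched as a threshold check: flag any pod whose peak CPU usage over the observation window stays below a small fraction of its request. The `PodUsage` type and the 5% threshold are assumptions for illustration; production tools also weigh memory, network activity, and how long the pod has been quiet.

```python
from dataclasses import dataclass


@dataclass
class PodUsage:
    """CPU request and usage samples for one pod, in millicores."""
    name: str
    cpu_request_m: int
    cpu_samples_m: list[int]


def find_idle_pods(pods: list[PodUsage], utilization_threshold: float = 0.05) -> list[str]:
    """Return names of pods whose peak usage never reaches the threshold share of their request."""
    idle = []
    for pod in pods:
        # Skip pods with no data or no request; they cannot be judged here.
        if not pod.cpu_samples_m or pod.cpu_request_m <= 0:
            continue
        peak = max(pod.cpu_samples_m)
        if peak / pod.cpu_request_m < utilization_threshold:
            idle.append(pod.name)
    return idle
```

Using the peak rather than the average keeps a pod that does real work once an hour from being flagged as idle.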

4. Chargeback and Showback

Modern cost optimization software provides sophisticated cost allocation capabilities that enable accurate chargeback and showback models. These features help organizations:

- Implement Fair Cost Distribution: Accurately allocate shared infrastructure costs across teams and projects based on actual usage.

- Drive Cost Awareness: Provide visibility into the true cost of applications and services, encouraging responsible resource usage.

- Support Budget Planning: Historical cost data and usage patterns inform more accurate budget forecasting and planning.

- Enable Cost Center Management: Track costs by business unit, project, or cost center for better financial management.
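The simplest allocation model splits a shared bill in proportion to each team's measured usage. The sketch below assumes usage is already aggregated per team (for example, in CPU core-hours); the function name and the team names are hypothetical.

```python
def allocate_cost(total_cost: float, usage_by_team: dict[str, float]) -> dict[str, float]:
    """Split a shared cost across teams in proportion to their usage."""
    total_usage = sum(usage_by_team.values())
    if total_usage <= 0:
        raise ValueError("total usage must be positive")
    # Rounding to cents means the shares can differ from the total by a cent or two.
    return {
        team: round(total_cost * usage / total_usage, 2)
        for team, usage in usage_by_team.items()
    }


shares = allocate_cost(1000.0, {"payments": 600, "search": 300, "platform": 100})
```

Whether to allocate by usage, by requests, or by the larger of the two is a policy decision: charging by requests penalizes overprovisioning, while charging by usage hides it in shared overhead.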

5. Budget Alerts and Governance

Proactive cost management requires systems that can identify and respond to cost anomalies before they impact budgets. Modern software provides:

- Real-time Alerts: Notifications when spending exceeds predefined thresholds

- Anomaly Detection: Machine learning algorithms that identify unusual spending patterns

- Approval Workflows: Require approval for resource changes that impact costs

- Policy Enforcement: Automatic enforcement of organizational resource policies
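Threshold alerts and anomaly detection can both be sketched in a few lines. The example below uses a z-score against recent daily spend as a stand-in for the ML-based detection described above, plus a plain budget check; the function names, the 3-sigma cutoff, and the 80% alert fraction are illustrative assumptions.

```python
from statistics import mean, stdev


def is_spend_anomaly(history: list[float], today: float, z_threshold: float = 3.0) -> bool:
    """Flag today's spend if it sits more than z_threshold standard deviations from the recent mean."""
    if len(history) < 2:
        return False  # not enough data to estimate variability
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today != mu
    return abs(today - mu) / sigma > z_threshold


def over_budget(month_to_date: float, monthly_budget: float, alert_fraction: float = 0.8) -> bool:
    """Alert once spend reaches a set fraction of the monthly budget."""
    return month_to_date >= monthly_budget * alert_fraction
```

A z-score ignores weekly and seasonal patterns, so a real detector would compare against the same weekday or a forecast rather than a flat mean.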

Notable Kubernetes Cost Optimization Software: 6 Platforms for 2025

1. ScaleOps

ScaleOps provides production-grade automation for Kubernetes resource optimization, focusing on real-time, application context-aware optimization that runs entirely within your infrastructure. The platform automatically adjusts pod resource requests based on actual usage while maintaining application performance.

Key differentiators:

- Self-hosted Architecture: Complete control over optimization logic and data

- Production Focus: Designed specifically for critical production workloads

- Context-aware Optimization: Considers application characteristics and business requirements

- Seamless Integration: Works with existing Kubernetes tools and workflows

Organizations implementing ScaleOps can see cost reductions of up to 80% while improving application reliability and performance.

2. OpenCost

OpenCost offers open-source cost monitoring and optimization for Kubernetes, providing transparency and flexibility without vendor lock-in. The platform provides detailed cost visibility and basic optimization recommendations.

Features include:

- Open Source: Community-driven development and transparent operation

- Real-time Monitoring: Continuous cost tracking across clusters and namespaces

- Flexible Deployment: Can be customized for specific organizational needs

- Integration Capabilities: Works with existing monitoring and alerting systems

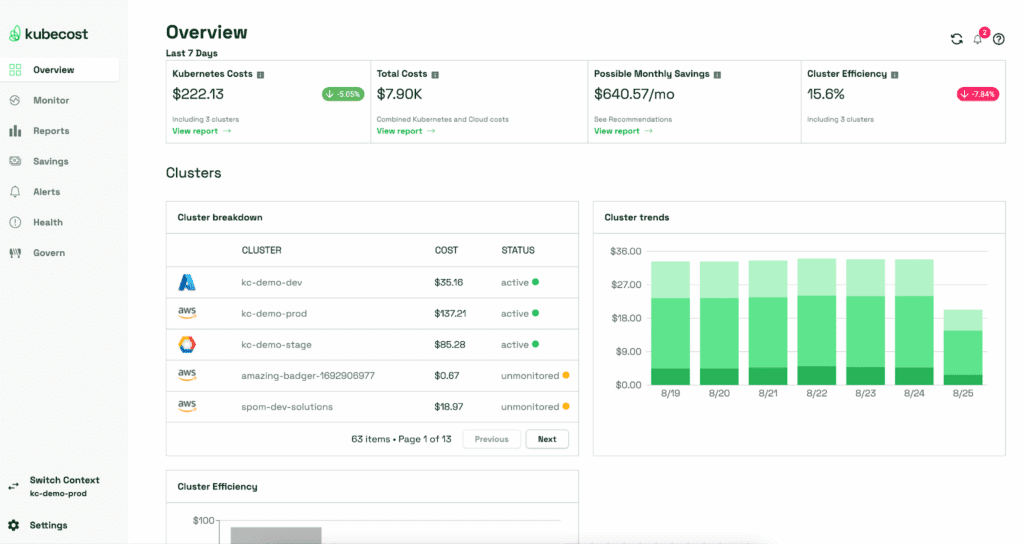

3. Kubecost

Kubecost provides comprehensive cost monitoring and optimization for Kubernetes environments, offering detailed visibility into cluster costs and optimization recommendations.

Core capabilities:

- Detailed Cost Attribution: Granular cost breakdowns by multiple dimensions

- Optimization Recommendations: Actionable suggestions for cost reduction

- Multi-cloud Support: Works across AWS, GCP, and Azure environments

- Integration with CI/CD: Embed cost considerations into development workflows

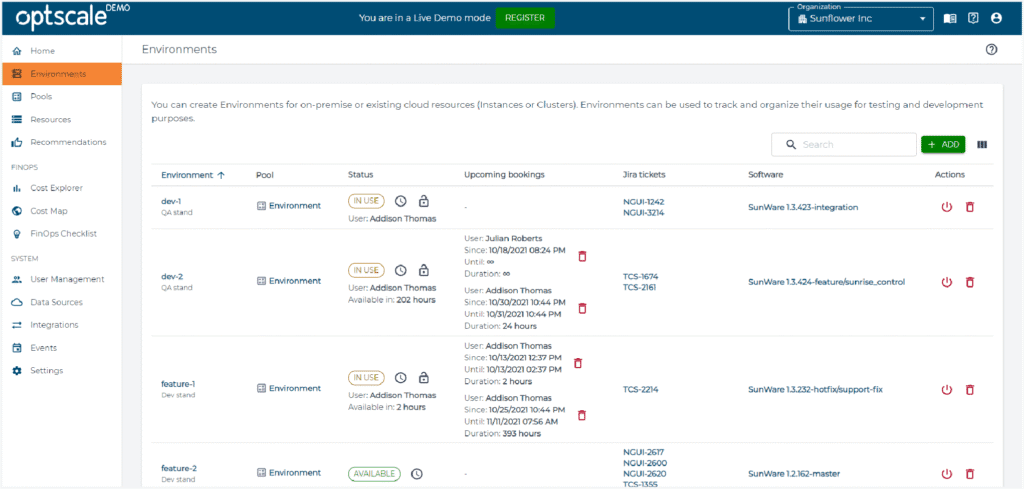

4. OptScale

OptScale offers cloud cost optimization with strong Kubernetes integration, focusing on multi-cloud cost management and optimization.

Key features:

- Multi-cloud Optimization: Cost management across different cloud providers

- Resource Optimization: Automated rightsizing and scaling recommendations

- Cost Analytics: Detailed analysis of spending patterns and trends

- Integration Capabilities: Works with existing cloud management tools

5. Goldilocks

Goldilocks provides VPA (Vertical Pod Autoscaler) recommendations for Kubernetes workloads, focusing on right-sizing individual containers within pods.

Capabilities include:

- VPA Integration: Leverages Kubernetes VPA for resource recommendations

- Visualization: Graphical representation of resource usage and recommendations

- Namespace-level Control: Granular control over which workloads to optimize

- Recommendation Quality: Filters and validates recommendations before implementation

6. SpotKube

SpotKube focuses on optimizing the use of spot instances within Kubernetes clusters, providing significant cost savings for fault-tolerant workloads.

Features:

- Spot Instance Management: Intelligent use of lower-cost compute options

- Workload Scheduling: Optimal placement of workloads on spot instances

- Fallback Mechanisms: Automatic failover to on-demand instances when needed

- Cost Optimization: Significant cost savings for appropriate workloads
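The arithmetic behind spot savings is straightforward: the more of the fleet that can tolerate interruption, the lower the blended rate. The sketch below is a back-of-the-envelope calculation with hypothetical prices; it does not model interruptions or the cost of falling back to on-demand capacity.

```python
def blended_hourly_cost(
    node_count: int,
    on_demand_price: float,
    spot_price: float,
    spot_fraction: float,
) -> float:
    """Estimate hourly cost for a fleet split between spot and on-demand nodes."""
    if not 0.0 <= spot_fraction <= 1.0:
        raise ValueError("spot_fraction must be between 0 and 1")
    # Round to whole nodes; the remainder stays on on-demand capacity.
    spot_nodes = round(node_count * spot_fraction)
    on_demand_nodes = node_count - spot_nodes
    return spot_nodes * spot_price + on_demand_nodes * on_demand_price


# Hypothetical prices: $0.20/hour on-demand, $0.06/hour spot.
all_on_demand = blended_hourly_cost(10, 0.20, 0.06, 0.0)
half_spot = blended_hourly_cost(10, 0.20, 0.06, 0.5)
```

Under these assumed prices, moving half of a ten-node fleet to spot drops the hourly cost from about $2.00 to about $1.30.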

Considerations for Choosing Kubernetes Cost Optimization Software

Multi-Cloud and Multi-Cluster Support

Organizations operating across multiple cloud providers or managing numerous Kubernetes clusters need software that can provide unified visibility and control. Consider solutions that offer:

- Unified Dashboards: Single pane of glass for cost management across environments

- Consistent Policies: Apply optimization policies across different clusters and clouds

- Comparative Analysis: Compare costs and performance across different environments

- Centralized Management: Manage optimization settings from a single interface

Custom Metrics Support

Different applications have unique performance characteristics that require specialized monitoring and optimization approaches. Look for software that supports:

- Custom Metrics Integration: Ability to incorporate application-specific metrics

- Flexible Alerting: Custom alert conditions based on business requirements

- Configurable Optimization: Tailor optimization strategies to specific workload types

- API Access: Programmatic access to metrics and optimization controls

Automation and Remediation Capabilities

The most effective cost optimization requires automated remediation capabilities that can respond to changing conditions without manual intervention. Key capabilities include:

- Automated Resource Adjustment: Continuous optimization of pod resource requests

- Intelligent Scaling: Context-aware scaling decisions based on multiple factors

- Anomaly Response: Automatic response to cost anomalies and unusual usage patterns

- Policy Enforcement: Automated enforcement of organizational resource policies

Manual optimization approaches often fail to keep pace with dynamic Kubernetes environments. Recognizing that Kubernetes cost optimization is more than tweaking dials helps organizations select solutions that provide sustainable, automated optimization.

Security and RBAC Integration

Cost optimization software must integrate seamlessly with existing security and access control systems. Important considerations include:

- Role-based Access Control: Granular control over who can view and modify optimization settings

- Audit Logging: Comprehensive logging of optimization activities for compliance

- Secure Communication: Encrypted communication between optimization components

- Compliance Support: Features that support regulatory compliance requirements

Ease of Deployment and Maintenance

The complexity of implementing and maintaining cost optimization software can impact its effectiveness. Evaluate solutions based on:

- Installation Simplicity: How quickly the software can be deployed and configured

- Ongoing Maintenance: Resource requirements for maintaining the optimization system

- Documentation and Support: Quality of documentation and availability of support

- Integration Complexity: How easily the software integrates with existing systems

ScaleOps: Real-Time Kubernetes Optimization Without the Guesswork

Most cost tools stop at dashboards and suggestions. ScaleOps goes further—it’s a production-grade platform used across thousands of clusters to actively manage Kubernetes resources in real time. No restarts, no downtime, no extra YAML.

Here’s what sets it apart:

- Fully self-hosted with air-gapped and GitOps-native support

- Zero developer disruption: no new APIs, no learning curve

How It Works

Step 1: Deploy and Discover

Install with a single Helm command. Within minutes, you’ll see:

- Resource inefficiencies

- Rightsizing opportunities

- Cost-saving potential across your workloads

Step 2: Rightsize Automatically

Start with a few production workloads. Enable automated rightsizing to:

- Continuously tune CPU/memory requests

- Match actual usage patterns

- Eliminate overprovisioning without risking performance

Apply across the entire cluster via GitOps-native CRDs. New workloads inherit policies instantly.

Step 3: Optimize Min/Max Replicas

Enable predictive autoscaling to:

- Adjust replica counts based on historical traffic

- Smooth out spikes without over-scaling

- Eliminate manual HPA tuning
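To show the general idea of deriving replica bounds from traffic history, here is a minimal Python sketch. It is not ScaleOps' algorithm: the function name, the per-pod capacity figure, and the availability floor of two replicas are assumptions for illustration only.

```python
import math


def replica_bounds(hourly_rps: list[float], rps_per_pod: float, floor: int = 2) -> tuple[int, int]:
    """Derive (min_replicas, max_replicas) from historical requests per second."""
    if not hourly_rps or rps_per_pod <= 0:
        raise ValueError("need traffic history and a positive per-pod capacity")
    # The quietest hour sets the minimum, never below the availability floor.
    min_replicas = max(floor, math.ceil(min(hourly_rps) / rps_per_pod))
    # The busiest hour sets the maximum.
    max_replicas = max(min_replicas, math.ceil(max(hourly_rps) / rps_per_pod))
    return min_replicas, max_replicas
```

For a history ranging from 80 to 950 requests per second and pods that each handle 100, this yields bounds of 2 and 10. A predictive system would go further and forecast the next window instead of relying on past extremes.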

Step 4: Run Smart with Karpenter + Spot

Pair with Karpenter to:

- Shift non-critical pods to Spot instances

- Enforce policies that balance cost and availability

- Capture extra savings with zero performance tradeoff

A Unified Optimization Engine

ScaleOps continuously coordinates:

- Rightsizing to reclaim unused capacity

- Predictive scaling to preempt performance dips

- Eviction-safe tuning that works under real load

- Spot-aware scheduling for deeper savings

- Cost attribution that goes all the way to the container

All via a GitOps-compliant control plane that fits into your existing workflows.

You deploy it once—then your cluster keeps itself optimized.

See It for Yourself

With ScaleOps:

- Cut cloud waste instantly by up to 80%

- Avoid performance hits

- Shift engineering time from resource babysitting to actual development

Ready to stop guessing?

- Book a live demo and see real workloads optimized in real time.

- Start your free trial and see savings in under 24 hours.