Managing Kubernetes in 2025 starts with one simple truth: the control plane governs your infrastructure. Through declarative APIs, we manage clusters with Cluster API, operate stateful systems with Operators, and provision cloud resources via Crossplane.

This declarative model is the foundation of production-grade operations, enabling platforms to stay resilient through node churn, regional outages, and volatile AI workloads, without human intervention.

In this article, we’ll work through the seven pillars of modern Kubernetes management. Along the way, you’ll get the key decision criteria, architectural advice, and a measurable plan to improve your platform’s performance and efficiency.


Pillar 1: Cluster Lifecycle Management

Building the Cluster Factory

Effective Kubernetes management begins with a simple but profound shift in mindset: your clusters are disposable, but your applications are sacred. We must treat our clusters like cattle, not pets. Interchangeable, reproducible, and managed by an automated system, not by hand.

This pillar is about building your “cluster factory”: a set of tools and processes that can provision, upgrade, and decommission Kubernetes clusters in a predictable, safe, and automated way.

The stability of everything you run depends on the strength of this foundation.

Provisioning: Choosing Your Foundation

The first decision in the lifecycle is how a cluster is born. The right choice here prevents countless operational headaches down the line.

- Managed Services (EKS, GKE, AKS): For most teams, the default, production-proven choice is a managed offering from one of the major cloud providers. This strategic decision offloads the immense complexity of running the Kubernetes control plane (etcd, API server, etc.). A dedicated SRE team at AWS, Google, or Microsoft handles the heavy lifting, so your team can focus on applications.

- Declarative Management (Cluster API): For organizations with multi-cloud, hybrid, or specific sovereignty needs, Cluster API (CAPI) is the industry standard. It embodies the powerful idea of “using Kubernetes to manage Kubernetes.” With CAPI, you define a desired cluster state, its version, node types, and count, as a declarative YAML object, and a management cluster works to make that state a reality. This is the foundation for true fleet management at scale.
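As a rough illustration of what such a declarative object can look like, the sketch below builds a Cluster API `Cluster` manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. It assumes the `cluster.x-k8s.io/v1beta1` API with a ClusterClass-based topology; the cluster name, ClusterClass name, and worker class are hypothetical placeholders, not values from any real environment.

```python
import json


def capi_cluster(name: str, version: str, workers: int) -> dict:
    """Build a Cluster API Cluster manifest that uses a managed topology."""
    return {
        "apiVersion": "cluster.x-k8s.io/v1beta1",
        "kind": "Cluster",
        "metadata": {"name": name},
        "spec": {
            "topology": {
                "class": "example-cluster-class",  # hypothetical ClusterClass
                "version": version,                # desired Kubernetes version
                "controlPlane": {"replicas": 3},
                "workers": {
                    "machineDeployments": [
                        # hypothetical worker class and deployment name
                        {"class": "default-worker", "name": "md-0", "replicas": workers}
                    ]
                },
            }
        },
    }


manifest = capi_cluster("prod-eu-1", "v1.30.0", workers=5)
print(json.dumps(manifest, indent=2))
```

Changing `version` or `workers` and re-applying the object is what asks the management cluster to reconcile the workload cluster toward the new state.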

Upgrades: The Path to Predictability

In the world of Kubernetes, upgrades are a primary source of fear, instability, and “technical debt”. A mature lifecycle strategy turns this fear into a boring, predictable process.

The “N-2” Versioning Doctrine

A simple rule demonstrates operational maturity: no production cluster should ever be more than two minor versions behind the latest stable Kubernetes release (e.g., if v1.30 is current, you should be on at least v1.28). This policy ensures you receive timely security patches and avoid the massive operational pain of trying to jump multiple versions at once.
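A rule like this is easy to check automatically. The following is a minimal sketch, assuming version strings of the form `v1.28` or `v1.28.4`; the function names are illustrative and not part of any Kubernetes tooling.

```python
import re


def minor_version(version: str) -> int:
    """Extract the minor number from a version such as 'v1.28' or '1.28.4'."""
    match = re.fullmatch(r"v?1\.(\d+)(?:\.\d+)?", version)
    if match is None:
        raise ValueError(f"unsupported version string: {version!r}")
    return int(match.group(1))


def within_n_minus_2(cluster_version: str, latest_stable: str) -> bool:
    """True when the cluster is at most two minor versions behind latest."""
    return minor_version(latest_stable) - minor_version(cluster_version) <= 2


print(within_n_minus_2("v1.28.4", "v1.30"))  # cluster on 1.28, latest 1.30
print(within_n_minus_2("v1.27.9", "v1.30"))  # cluster on 1.27, latest 1.30
```

A check of this kind could run in CI or as a scheduled job against every cluster in the fleet, flagging any that fall outside the window.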

Upgrade Patterns: Choosing the Right Strategy for Your Scale

The safest method is the Immutable Upgrade (Blue/Green) pattern. In plain terms, you use your provisioning process (e.g., CAPI) to create a brand new “green” cluster at the target version, sync all applications via GitOps, and then shift traffic at the load balancer once it’s proven healthy. This de-risks the entire process and provides an instant rollback path, making it the gold standard for critical, moderately-sized clusters.

However, for large, multi-tenant clusters with hundreds of nodes, the cost of fully duplicating the environment is often prohibitive. For these fleets, the state-of-the-art approach is a meticulously managed In-Place Rolling Upgrade.

This involves upgrading the control plane first, followed by a phased rollout across the worker nodes, often one availability zone at a time. The safety of this process relies entirely on a mature implementation of Kubernetes’ own workload safety primitives. It’s only possible when critical applications are protected by:

- `PodDisruptionBudgets`: preventing too many pods from being drained at once

- `topologySpreadConstraints`: ensuring replicas are spread across failure domains

- Well-defined `PriorityClasses`: managing rescheduling during the churn

This turns the upgrade from a high-risk event into a controlled, automated, and observable process suitable for large-scale infrastructure.
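To make the three primitives concrete, here is a hedged sketch that expresses them as Python dictionaries and prints them as JSON manifests. The field names follow the `policy/v1` and `scheduling.k8s.io/v1` APIs; the application label, object names, and priority value are hypothetical.

```python
import json

APP_LABELS = {"app": "checkout"}  # hypothetical workload label

# Limits voluntary evictions (such as node drains) to one pod at a time.
pdb = {
    "apiVersion": "policy/v1",
    "kind": "PodDisruptionBudget",
    "metadata": {"name": "checkout-pdb"},
    "spec": {"maxUnavailable": 1, "selector": {"matchLabels": APP_LABELS}},
}

# Gives critical pods scheduling precedence while nodes are being replaced.
priority_class = {
    "apiVersion": "scheduling.k8s.io/v1",
    "kind": "PriorityClass",
    "metadata": {"name": "business-critical"},
    "value": 100000,  # hypothetical priority value
    "globalDefault": False,
    "description": "Workloads that must reschedule first during upgrades.",
}

# Fragment of a pod template spec: spread replicas evenly across zones.
pod_template_fragment = {
    "priorityClassName": "business-critical",
    "topologySpreadConstraints": [
        {
            "maxSkew": 1,
            "topologyKey": "topology.kubernetes.io/zone",
            "whenUnsatisfiable": "DoNotSchedule",
            "labelSelector": {"matchLabels": APP_LABELS},
        }
    ],
}

for obj in (pdb, priority_class, pod_template_fragment):
    print(json.dumps(obj, indent=2))
```

With a zone-by-zone rollout, the spread constraint keeps replicas in the zones not being drained, and the disruption budget bounds how fast pods in the drained zone are evicted.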

Decommissioning and Recovery: The Forgotten Disciplines

The lifecycle ends when a cluster is terminated or fails. A mature strategy accounts for both scenarios. Cleanly decommissioning clusters is critical in order to avoid orphaned cloud resources and runaway costs.

More importantly, you must be prepared for cluster failure. This means moving beyond “DR Theater”, the dangerous practice of having untested backup scripts. Traditional backup methods often fall short here; they might save a disk’s data but lose the essential Kubernetes resource manifests (Deployments, ConfigMaps, Secrets) that define how the application runs.

This is why the state-of-the-art approach uses a Kubernetes-native tool, with the open-source project Velero being the most widely adopted solution. Its power lies in its ability to back up both the persistent data (via volume snapshots) and the declarative state of your applications.
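As one possible shape for a recurring backup, the sketch below builds a Velero `Schedule` object as a Python dictionary. It assumes the `velero.io/v1` API and Velero installed in the `velero` namespace; the schedule name, the cron expression, the backed-up namespace, and the retention period are hypothetical choices.

```python
import json

schedule = {
    "apiVersion": "velero.io/v1",
    "kind": "Schedule",
    "metadata": {"name": "daily-apps", "namespace": "velero"},
    "spec": {
        "schedule": "0 2 * * *",  # cron syntax: every day at 02:00
        "template": {
            "includedNamespaces": ["checkout"],  # hypothetical namespace
            "snapshotVolumes": True,             # capture persistent volumes too
            "ttl": "720h0m0s",                   # keep each backup for 30 days
        },
    },
}

print(json.dumps(schedule, indent=2))
```

A schedule alone is still "DR Theater" unless restores are exercised regularly, for example into a scratch cluster.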

Pillar 2: GitOps with Policy Guardrails

Git is Your Source of Truth, But Not Your Only Control

GitOps is no longer an emerging trend; it’s the default, production-proven operating model for managing Kubernetes. The principle is simple and powerful: your Git repository is the single source of truth for the desired state of your infrastructure. An agent running in your cluster, like ArgoCD or Flux, continuously works to make reality match what’s declared in Git.

But as platforms scale, a simple truth emerges: a basic GitOps loop isn’t enough. The real challenge is managing the inevitable exceptions, enforcing safety, and handling the dynamic state that lives outside your static YAML files. This pillar is about evolving from simple GitOps to a robust, policy-driven system that makes configuration drift impossible and empowers teams to deploy safely.

The “GitOps Gap”

The “GitOps Gap” is the space between the pure, declarative state in your repository and the messy, dynamic reality of a running cluster. Closing this gap is the hallmark of a mature management strategy.

- Dynamic State Drift: What happens when a Horizontal Pod Autoscaler (HPA) scales your application from 5 to 10 replicas due to a traffic spike? Your cluster’s live state no longer matches the `replicas: 5` declared in Git. This is intentional, desired drift. The challenge is distinguishing this from unintentional drift, like a manual hotfix applied with `kubectl edit` during an outage that never gets committed back to Git. Mature GitOps systems have monitoring in place to alert on this unauthorized drift while allowing for legitimate, automated changes.

- The Secrets Lifecycle: Committing secrets directly to Git, even encrypted, is an anti-pattern that creates risk. The modern approach is to externalize secrets. Your Git repository should only contain a reference to a secret, not the secret itself. An in-cluster tool like the External Secrets Operator (ESO) is then responsible for fetching the actual secret value from a secure vault (e.g., AWS Secrets Manager, Google Secret Manager, HashiCorp Vault) and syncing it into a Kubernetes Secret that the running pod consumes. This decouples the application configuration from the secret’s lifecycle, allowing security teams to rotate credentials without requiring a new application deployment.
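The distinction between expected and unauthorized drift can be sketched in a few lines. This is a simplified model, not how ArgoCD or Flux implement diffing: it assumes flat dictionaries of fields, and the `automation_owned` set (fields a controller such as the HPA is allowed to change) is a hypothetical input.

```python
def classify_drift(desired: dict, live: dict, automation_owned: set[str]) -> dict[str, str]:
    """Label each differing field as 'expected' or 'unauthorized' drift."""
    findings: dict[str, str] = {}
    for field in sorted(desired.keys() | live.keys()):
        if desired.get(field) == live.get(field):
            continue  # no drift on this field
        findings[field] = "expected" if field in automation_owned else "unauthorized"
    return findings


# Desired state from Git versus what is running (hypothetical values).
desired = {"replicas": 5, "image": "registry.example.com/checkout:1.4.2"}
live = {"replicas": 10, "image": "registry.example.com/checkout:1.4.2-hotfix"}

report = classify_drift(desired, live, automation_owned={"replicas"})
print(report)
```

Here the replica change made by the autoscaler is tolerated, while the image changed by hand is the kind of difference that should raise an alert.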

Policy-Driven Guardrails

You cannot scale GitOps across dozens of teams and hundreds of services without automated safety checks. This is where Policy-as-Code becomes a non-negotiable component of your platform. Tools like OPA/Gatekeeper or Kyverno act as an admission controller, an automated gatekeeper that inspects every change before it’s applied to the cluster.

Instead of relying on human code reviews to catch every potential issue, you write declarative policies that enforce your operational best practices automatically. For example, you can:

- Prevent Mistakes: A policy can reject a new application that doesn’t have proper resource `requests` and `limits` set, preventing a single service from starving an entire cluster.

- Enforce Security: You can mandate that all container images must come from your trusted corporate registry and have been scanned for vulnerabilities.

- Standardize Labels: A policy can require every resource to have a `team` and `cost-center` label, ensuring you never have mysterious, untraceable cloud spend again.

By integrating policy enforcement directly into your GitOps workflow, you create a system where the “right way” is the only way. This turns your platform from a reactive one that cleans up messes into a proactive one that prevents them from happening in the first place.
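The logic behind such guardrails can be modeled in plain Python. The sketch below is not Kyverno or Gatekeeper policy syntax; it only mirrors the three example checks above against a pod manifest, and the registry prefix is a hypothetical value.

```python
TRUSTED_REGISTRY = "registry.example.com/"  # hypothetical corporate registry
REQUIRED_LABELS = ("team", "cost-center")


def violations(pod: dict) -> list[str]:
    """Return the policy violations for a pod manifest (empty list = admit)."""
    problems: list[str] = []
    labels = pod.get("metadata", {}).get("labels", {})
    for label in REQUIRED_LABELS:
        if label not in labels:
            problems.append(f"missing label: {label}")
    for container in pod.get("spec", {}).get("containers", []):
        name = container.get("name", "<unnamed>")
        if not container.get("image", "").startswith(TRUSTED_REGISTRY):
            problems.append(f"{name}: image not from trusted registry")
        resources = container.get("resources", {})
        for section in ("requests", "limits"):
            if not resources.get(section):
                problems.append(f"{name}: resources.{section} not set")
    return problems


bad_pod = {
    "metadata": {"labels": {"team": "payments"}},
    "spec": {"containers": [{
        "name": "web",
        "image": "docker.io/library/nginx:latest",
        "resources": {"requests": {"cpu": "100m"}},
    }]},
}
for problem in violations(bad_pod):
    print(problem)
```

An admission controller applies the same idea at the API server: a non-empty list of violations means the request is rejected before anything is persisted.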

Pillar 3: Observability That Rolls Up to SLOs

Stop Measuring Pods, Start Measuring Happiness

For years, we monitored our infrastructure by watching CPU utilization and memory pressure. A green dashboard meant a healthy system. This approach is now obsolete. Your users don’t care about your node’s CPU average; they care about one thing: their experience. A platform can be completely “green” on traditional metrics while delivering a slow, frustrating experience that violates user expectations.

Modern observability isn’t about dashboards; it’s about outcomes. The state of the art is to define your system’s health using Service Level Objectives (SLOs), which are precise, measurable targets for user happiness, typically focused on availability and latency (e.g., “99.9% of API requests will be served in under 200ms”). This pillar is about building a system that not only measures these SLOs but uses them to drive intelligent, automated actions.
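An SLO target translates directly into an error budget: the number of requests that are allowed to miss the objective in a given window. The following sketch works through that arithmetic for the 99.9% example; the request volume and the count of slow requests are hypothetical.

```python
def error_budget(total_requests: int, slo_target: float) -> int:
    """Requests allowed to miss the objective within the window."""
    return round(total_requests * (1 - slo_target))


def budget_remaining(total_requests: int, bad_requests: int, slo_target: float) -> float:
    """Fraction of the error budget still unspent (negative = SLO violated)."""
    budget = error_budget(total_requests, slo_target)
    if budget == 0:
        raise ValueError("a 100% target leaves no error budget")
    return 1 - bad_requests / budget


# Hypothetical month: 10 million requests, 2,500 of them slower than 200ms.
print(error_budget(10_000_000, 0.999))
print(budget_remaining(10_000_000, 2_500, 0.999))
```

Tracking the remaining budget, rather than raw CPU or memory, is what lets alerts and automation react to user-facing impact.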

From SLOs to Self-Tuning Systems

An SLO is a promise to your users, and mature platforms use automation to keep that promise. This reveals a critical disconnect: your promise is about application performance (like latency), but your primary autoscaler (HPA) natively understands infrastructure metrics (like CPU). Scaling on CPU alone is a lagging indicator: you’re reacting to resource pressure, not the business demand that causes the pressure. This is a recipe for violating your SLOs during a sudden traffic spike.

Mature platforms bridge this gap by teaching Kubernetes to understand business-aware signals. This is done by building a custom metrics pipeline, a system that translates application-specific metrics into a language the Kubernetes HPA can act upon.

This pipeline has three essential components:

- Application Instrumentation: Your application exposes a custom metric that represents real business activity. For an e-commerce platform, this isn’t CPU; it’s `checkout_queue_depth` or `requests_per_second`.

- Monitoring (Prometheus): Your monitoring system scrapes and stores this custom metric.

- The Bridge (Metrics Adapter): This crucial component exposes the custom metric to the Kubernetes control plane, making it available for HPA to use as a scaling target.

The result is a self-tuning system. You can now configure your HPA to scale the checkout service based on the actual number of pending orders. You’re no longer guessing. You’re creating a direct, proactive link between business demand and resource allocation, turning your platform from one that simply runs containers into one that actively works to preserve user happiness.
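A sketch of the last step, under stated assumptions: the HPA below targets the `checkout_queue_depth` metric through the `autoscaling/v2` API, and the helper reproduces the core scaling formula from the Kubernetes documentation, `ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The target of 20 pending orders per pod, the replica bounds, and the Deployment name are hypothetical, and the helper ignores the tolerance band, stabilization window, and min/max clamping that the real controller applies.

```python
import json
import math


def desired_replicas(current_replicas: int, current_avg: float, target_avg: float) -> int:
    """Core HPA formula, without tolerance, stabilization, or bounds."""
    return math.ceil(current_replicas * current_avg / target_avg)


hpa = {
    "apiVersion": "autoscaling/v2",
    "kind": "HorizontalPodAutoscaler",
    "metadata": {"name": "checkout"},
    "spec": {
        "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": "checkout"},
        "minReplicas": 5,
        "maxReplicas": 50,
        "metrics": [{
            "type": "Pods",  # per-pod custom metric served by the metrics adapter
            "pods": {
                "metric": {"name": "checkout_queue_depth"},
                "target": {"type": "AverageValue", "averageValue": "20"},
            },
        }],
    },
}

print(json.dumps(hpa, indent=2))
# 5 replicas each seeing 40 pending orders, against a target of 20 per pod:
print(desired_replicas(5, 40, 20))
```

The metric only appears to the HPA if a metrics adapter is serving it through the custom metrics API; without that bridge the object is accepted but cannot scale.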

Pillar 4: Security and Software Supply Chain

Security is a Pipeline, Not a Final Exam

In the early days of Kubernetes, security was often treated as a final gate: scan the image before it deploys. This approach is dangerously outdated. A modern security posture treats security as a continuous, automated discipline that is woven into every stage of the software lifecycle, from a developer’s first line of code to the application running in production.

The goal is to build a layered defense where security is automated, enforced by default, and provides visibility into every part of your supply chain. This pillar is about the three critical stages of a modern “code-to-cluster” security pipeline.

“Shift-Left” Prevention in Your CI/CD

The cheapest and most effective place to fix a vulnerability is before it ever gets close to production. This “shift-left” philosophy is about integrating automated security checks directly into your Continuous Integration (CI) pipeline.

- Vulnerability Scanning: Before a container image is pushed to a registry, your CI job must automatically scan it for known vulnerabilities using a tool like Trivy or Snyk. A build with critical CVEs should fail automatically, preventing the insecure artifact from ever being published.

- Software Bill of Materials (SBOM): A critical part of supply chain security is knowing exactly what’s inside your software. Modern CI pipelines generate an SBOM: a complete inventory of all libraries, dependencies, and their versions. This provides an essential audit trail and allows you to instantly identify all affected services when a new vulnerability like Log4j is discovered.
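The "instantly identify all affected services" step amounts to a lookup across stored SBOMs. The sketch below assumes each SBOM is a dictionary with a `components` list of `name` and `version` entries, loosely modeled on the CycloneDX JSON layout; the service names and their contents are invented for illustration.

```python
def affected_services(sboms: dict[str, dict], package: str, bad_versions: set[str]) -> list[str]:
    """Return the services whose SBOM lists a vulnerable package version."""
    hits = []
    for service, sbom in sboms.items():
        for component in sbom.get("components", []):
            if component.get("name") == package and component.get("version") in bad_versions:
                hits.append(service)
                break  # one match is enough to flag the service
    return sorted(hits)


# Hypothetical SBOM inventory keyed by service name.
sboms = {
    "checkout": {"components": [{"name": "log4j-core", "version": "2.14.1"}]},
    "search": {"components": [{"name": "log4j-core", "version": "2.17.1"}]},
    "frontend": {"components": [{"name": "react", "version": "18.2.0"}]},
}

print(affected_services(sboms, "log4j-core", {"2.14.0", "2.14.1"}))
```

In practice the set of vulnerable versions would come from an advisory feed rather than being written by hand.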

The Central Gatekeeper: Admission Control

An admission controller is your platform’s most powerful security checkpoint. It intercepts every single request to the Kubernetes API server and enforces your policies before an object is ever created in the cluster. This is where you enforce the “guardrails” we discussed in the GitOps pillar, using tools like OPA/Gatekeeper or Kyverno.

This is not just about blocking bad configurations; it’s about building a secure-by-default platform. For example, you can enforce policies that:

- Verify Image Signatures: The admission controller can check for a cryptographic signature on an image using Cosign/Sigstore, proving it came from your trusted CI pipeline and hasn’t been tampered with.

- Enforce Pod Security Standards (PSS): You can enforce that all workloads run with hardened security contexts, such as disallowing them from running as the root user or accessing the host filesystem. This drastically reduces the blast radius if a pod is compromised.

- Mandate Network Policies: You can require that every new namespace must have a default-deny Network Policy, ensuring that pods are isolated by default and can only communicate with other services that are explicitly allowed.
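For reference, the objects such policies typically look for are small. The sketch below generates a namespace labeled for the built-in Pod Security Admission `restricted` level together with a default-deny `NetworkPolicy`; the namespace and policy names are hypothetical, and the admission rule that would require these objects is not shown.

```python
import json


def hardened_namespace(name: str) -> list[dict]:
    """Namespace enforcing the 'restricted' PSS level plus a default-deny policy."""
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            "labels": {"pod-security.kubernetes.io/enforce": "restricted"},
        },
    }
    default_deny = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": name},
        # An empty podSelector matches every pod; listing both policy types
        # with no rules blocks all ingress and egress until explicitly allowed.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress", "Egress"]},
    }
    return [namespace, default_deny]


objects = hardened_namespace("payments")
for obj in objects:
    print(json.dumps(obj, indent=2))
```

A default-deny policy only takes effect when the cluster's network plugin enforces NetworkPolicy objects.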

“Shift-Right” Detection at Runtime

You must assume that despite your best preventative measures, a threat may still make it into your running cluster. Runtime security is about detecting and responding to active threats.

Traditional security tools are often blind to threats inside a container. The state-of-the-art solution is to use tools that leverage eBPF, a revolutionary Linux kernel technology that provides deep, real-time visibility into system behavior without modifying applications.

Tools like Falco or Tetragon use eBPF to monitor for suspicious activity at the kernel level. They can instantly detect and alert on anomalous behavior that could indicate a breach, such as:

- A shell being spawned inside a production container

- An application process trying to write to a sensitive system directory like `/etc`

- An outbound network connection to a known malicious IP address

This “shift-right” capability is the final, essential layer of a defense-in-depth strategy, providing the visibility you need to detect and contain threats that are already active in your environment.
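The detection logic for the three behaviors listed above can be pictured as rules evaluated against a stream of kernel-level events. This sketch is not Falco's or Tetragon's rule language; the event schema (`type`, `process`, `path`, `dest_ip`) is a hypothetical simplification, and the blocklist uses a documentation-reserved address range as a stand-in for a threat feed.

```python
import ipaddress
from typing import Optional

SHELLS = {"sh", "bash", "zsh", "dash"}
BLOCKLIST = [ipaddress.ip_network("203.0.113.0/24")]  # stand-in for a threat feed


def suspicious(event: dict) -> Optional[str]:
    """Return a finding for a suspicious event, or None if it looks benign."""
    kind = event.get("type")
    if kind == "exec" and event.get("process") in SHELLS:
        return "shell spawned in container"
    if kind == "write" and event.get("path", "").startswith("/etc/"):
        return "write below /etc"
    if kind == "connect":
        dest = ipaddress.ip_address(event["dest_ip"])
        if any(dest in network for network in BLOCKLIST):
            return "connection to blocklisted address"
    return None


events = [
    {"type": "exec", "process": "bash"},
    {"type": "write", "path": "/etc/passwd"},
    {"type": "connect", "dest_ip": "203.0.113.7"},
    {"type": "connect", "dest_ip": "198.51.100.1"},
]
for event in events:
    print(event, "->", suspicious(event))
```

Real eBPF-based tools collect these events in the kernel and enrich them with container and pod metadata before rules are evaluated.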

Pillar 5: Scaling and Cost Optimization

Stability Before Savings

In Kubernetes, performance tuning and cost optimization are two sides of the same coin. The ultimate goal is efficiency: using the exact amount of resources necessary to meet your performance SLOs, and no more. However, the traditional tools for this have significant flaws that often force a trade-off between cost and stability.

The modern doctrine demands a more intelligent approach. It starts by scaling on metrics that directly reflect user experience and then uses real-time, SLO-aware analysis to continuously rightsize workloads without guesswork or risk.

The Event-Driven Shift with KEDA

As we established in the observability pillar, scaling on CPU or memory is a reactive, lagging indicator of user pain. A mature platform scales on business signals that are leading indicators of load.

This is where KEDA (Kubernetes Event-driven Autoscaling) has become the star of the show. KEDA extends the Kubernetes HPA with a rich library of “scalers” that can connect to over 50 event sources. Instead of a basic HPA, you create a KEDA ScaledObject that scales your application based on a direct measure of business demand, like the number of messages in an SQS queue. Your system now scales up proactively as the queue grows, before users experience latency, and can even scale down to zero when idle, saving costs.
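A minimal sketch of such a `ScaledObject`, assuming the `keda.sh/v1alpha1` API and the `aws-sqs-queue` scaler. The Deployment name, queue URL, region, and thresholds are hypothetical, and the authentication settings a real SQS trigger needs are omitted.

```python
import json

scaled_object = {
    "apiVersion": "keda.sh/v1alpha1",
    "kind": "ScaledObject",
    "metadata": {"name": "order-worker"},
    "spec": {
        "scaleTargetRef": {"name": "order-worker"},  # hypothetical Deployment
        "minReplicaCount": 0,   # scale to zero when the queue is empty
        "maxReplicaCount": 30,
        "triggers": [{
            "type": "aws-sqs-queue",
            "metadata": {
                # hypothetical queue; trigger metadata values are strings
                "queueURL": "https://sqs.eu-west-1.amazonaws.com/123456789012/orders",
                "queueLength": "5",  # target messages per replica
                "awsRegion": "eu-west-1",
            },
        }],
    },
}

print(json.dumps(scaled_object, indent=2))
```

KEDA creates and manages the underlying HPA for the target, so a separate hand-written HPA for the same workload would conflict with it.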

Node Provisioning: From Static Groups to Just-in-Time Compute

Scaling your pods is only half the battle; you also need the underlying node infrastructure to respond efficiently.

- Traditional Node Groups: The classic approach uses Cluster Autoscaler to manage a set of predefined node groups (or ASGs). When more capacity is needed, it adds another node of a fixed instance type. This is reliable but often inefficient, leading to poor “bin packing” and significant waste from half-empty nodes.

- Workload-Aware Provisioning (Karpenter): The modern solution is to use a provisioner like Karpenter. It doesn’t manage static groups. Instead, it watches for pending pods and provisions the perfect-sized, cheapest possible node for that specific workload, just-in-time. This leads to dramatically better utilization and lower costs.
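The difference between the two approaches can be shown with a toy model. This is not Karpenter's actual algorithm: it ignores system overhead, daemonsets, spot pricing, and scheduling constraints, and the instance catalog and prices are invented. It only illustrates picking the cheapest type that fits the pending pods instead of always adding a fixed one.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InstanceType:
    name: str
    cpu: float
    memory_gib: float
    hourly_cost: float


# Hypothetical catalog; real sizes and prices vary by provider and region.
CATALOG = [
    InstanceType("small", 2, 8, 0.10),
    InstanceType("medium", 4, 16, 0.20),
    InstanceType("large", 8, 32, 0.40),
    InstanceType("xlarge", 16, 64, 0.80),
]


def cheapest_fit(pending_pods: list[tuple[float, float]],
                 catalog: list[InstanceType]) -> Optional[InstanceType]:
    """Cheapest single instance that fits all pending (cpu, memory_gib) requests."""
    need_cpu = sum(cpu for cpu, _ in pending_pods)
    need_mem = sum(mem for _, mem in pending_pods)
    candidates = [t for t in catalog if t.cpu >= need_cpu and t.memory_gib >= need_mem]
    return min(candidates, key=lambda t: t.hourly_cost) if candidates else None


pending = [(1.0, 2.0), (1.5, 4.0), (0.5, 1.0)]  # 3 CPUs and 7 GiB in total
choice = cheapest_fit(pending, CATALOG)
print(choice)
```

A node group fixed at the `xlarge` type would place the same three pods on a node that is less than 20% utilized on CPU, which is the "half-empty node" waste described above.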

The Challenge of Continuous Optimization

Even with KEDA and Karpenter, you are still left with the core challenge of rightsizing. The default tool, the Vertical Pod Autoscaler (VPA), is too slow and disruptive for production use, relying on long-term historical data and often fighting with HPA.

This is where a holistic, autonomous approach becomes necessary. An intelligent platform must connect all three layers—pod scaling, pod rightsizing, and node scaling—into a single, coordinated system.

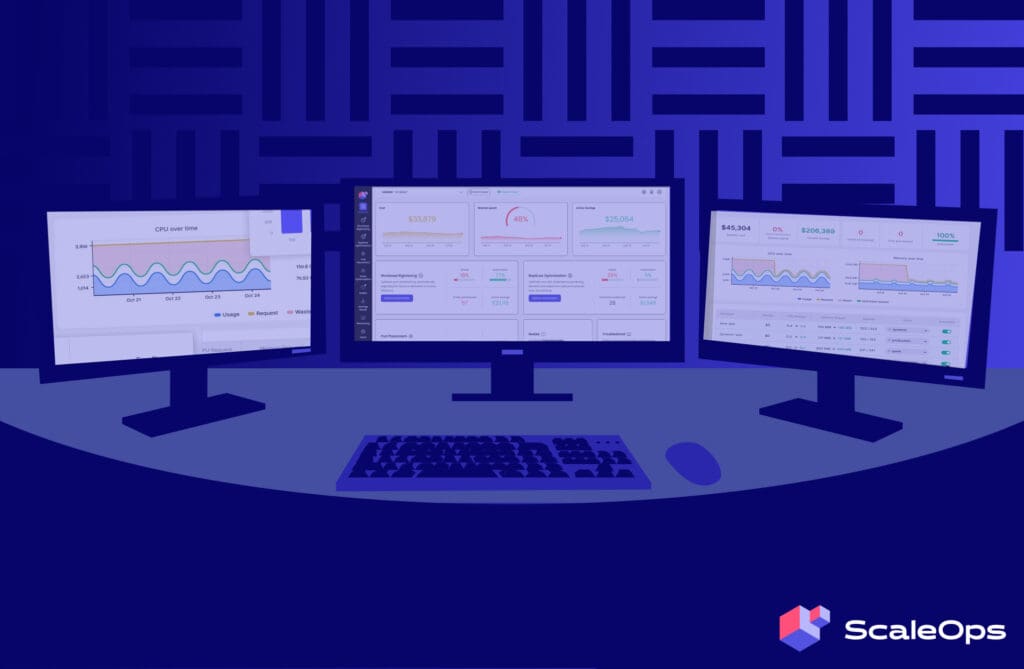

The ScaleOps Platform is designed to be this unifying intelligence layer.

- First, it provides continuous Rightsizing powered by real-time telemetry. This automates safe and accurate resource recommendations without the flaws of historical decay.

- Second, Replica Optimization introduces proactive behavior, recognizing seasonal patterns and adjusting scaling policies to prevent SLO violations before they happen.

- Finally, it includes intelligent Node Optimization, ensuring that pod-level efficiencies translate directly into infrastructure savings by coordinating with provisioners like Karpenter and managing node consolidation safely and more effectively.

Pillar 6: AI/ML on Kubernetes

More Than Just Another Workload

Running AI and Machine Learning workloads on Kubernetes is the new frontier of platform management. These are not typical stateless web applications. AI workloads are resource-intensive, stateful in unique ways (models and data), and highly sensitive to scheduling and hardware topology. Simply deploying a model in a container is a recipe for inefficiency and poor performance.

A mature platform treats AI as a first-class citizen with specialized requirements. The goal is to transform your Kubernetes cluster into a shared, efficient, and high-performance “GPU cloud,” turning scarce and expensive accelerator hardware into a reliable, multi-tenant utility. This pillar covers the state-of-the-art practices for managing these demanding workloads.

Accelerator Management: Taming the GPU

The primary challenge with AI is managing accelerators like GPUs. A GPU is not like a CPU core; it has its own memory, specific driver requirements, and cannot be easily shared by default.

- Exposing Hardware: The first step is making the hardware visible to Kubernetes. This is done through device plugins. For NVIDIA GPUs, their official device plugin exposes each GPU on a node as a schedulable resource, allowing you to request `nvidia.com/gpu: 1` just like you would `cpu: 1`.

- Fractional GPUs and Sharing: A single powerful GPU is often too much for one inference task, leading to massive waste. The modern solution is to use technologies like NVIDIA Multi-Instance GPU (MIG), which can partition a single physical GPU into multiple, fully isolated virtual GPUs. This allows you to run several different models on one piece of hardware safely, dramatically increasing utilization and lowering costs.
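In manifest terms, both cases come down to the resource name a container requests. The sketch below builds two pod manifests, one asking for a whole GPU and one asking for a MIG slice. The image names are hypothetical, and the MIG resource name `nvidia.com/mig-1g.5gb` is an assumption: the names actually advertised depend on the GPU model and on how the NVIDIA device plugin's MIG strategy is configured.

```python
import json


def inference_pod(name: str, image: str,
                  gpu_resource: str = "nvidia.com/gpu", count: int = 1) -> dict:
    """Pod manifest requesting an accelerator as an extended resource."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": "model",
                "image": image,
                # Extended resources such as GPUs are requested under limits.
                "resources": {"limits": {gpu_resource: count}},
            }]
        },
    }


whole_gpu = inference_pod("llm-a", "registry.example.com/llm:1.0")
mig_slice = inference_pod("embedder", "registry.example.com/embed:1.0",
                          gpu_resource="nvidia.com/mig-1g.5gb")  # assumed MIG profile name

print(json.dumps(whole_gpu, indent=2))
print(json.dumps(mig_slice, indent=2))
```

Several pods like `embedder` can then be scheduled onto one physical GPU, each bound to its own isolated slice.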

The “Time-to-First-Token” Problem: Mitigating Cold Starts

For user-facing AI applications, like a chatbot, the “cold start” problem is a critical user experience issue. Loading a multi-gigabyte model from storage into GPU memory can take tens of seconds or even minutes. A user will not wait that long for their first response. This “time-to-first-token” is a key performance metric.

Mature platforms solve this with several patterns:

- Model Servers: Instead of having each pod load its own model, you use a dedicated model server like KServe, vLLM, or TensorRT-LLM. These systems are optimized for serving models, often keeping popular models “warm” and ready in GPU memory.

- Warm Pools: Just as with stateless apps, you can maintain a warm pool of nodes with the necessary GPU drivers and container images pre-pulled. When a new inference pod is needed, it can be scheduled instantly on a ready node.

Intelligent Scheduling: Placing Workloads Wisely

Where an AI workload runs matters immensely. A pod that needs to communicate with a specific GPU over a high-speed NVLink connection must be scheduled on the right node and with the correct NUMA (Non-Uniform Memory Access) alignment.

- Topology Awareness: Kubernetes is becoming more topology-aware, but this often requires using tools like the Node Feature Discovery (NFD) operator. NFD labels your nodes with detailed hardware information, allowing you to use `nodeSelectors` or affinity rules to place your pods with precision.

- Batch vs. Online Inference: AI workloads are not monolithic. You have long-running batch jobs and short-lived, latency-sensitive online inference requests. A mature platform uses different scheduling strategies for each, often using KEDA to scale batch jobs from queues and a standard HPA to scale online services based on latency or tokens-per-second.

From Orchestration to Autonomous Optimization

As we’ve seen, a production-grade AI platform requires orchestrating a complex toolchain: device plugins, model servers, topology-aware schedulers, and more. However, even with the best tools, platform teams are often left reacting to problems. The provisioning bottleneck remains a primary cause of cold-start latency, and inefficient GPU sharing still leads to massive waste.

This is where the state of the art moves beyond orchestration to autonomous optimization. An intelligent platform like ScaleOps provides the unifying layer that addresses these systemic issues. It solves the provisioning bottleneck with proactive signaling to ensure GPU nodes are ready the moment they’re needed. Furthermore, it transforms GPU scheduling from a wasteful guessing game into an automated science, using resource-aware “bin packing” to safely co-locate multiple models on a single GPU. This maximizes the utilization and ROI of your most expensive hardware, turning a complex collection of tools into a truly efficient AI platform.

Pillar 7: Multi-Cluster and Fleet Governance

From a Single Cluster to a Global Fleet

For any successful organization, the Kubernetes journey rarely stops at a single cluster. Growth, regulation, and the need for resilience inevitably lead to a multi-cluster reality. This isn’t a sign of failure; it’s a sign of scale. Your platform is now a fleet, distributed across regions, clouds, or environments.

The challenge is to manage this fleet without multiplying your operational overhead. A command that is easy to run on one cluster becomes a source of dangerous inconsistency when run manually across dozens. The goal of fleet governance is to apply the same principles of declarative automation that work for a single cluster to your entire environment, ensuring consistency and safety at scale.

Why Go Multi-Cluster?

Organizations adopt a multi-cluster strategy for critical business reasons:

- Resilience and High Availability: To survive a complete zonal or regional cloud provider outage, you need to be able to fail over traffic to a replica of your application running in a different geographic location.

- Data Residency and Compliance: Regulations like GDPR require user data to be stored and processed within specific geographic boundaries, necessitating dedicated clusters in those regions.

- Tenant and Environment Isolation: Providing separate clusters for different business units or for your `dev`, `staging`, and `prod` environments provides a strong security and resource isolation boundary.

The Centralized Configuration Pattern

How do you deploy the same application to ten different clusters, each with a slight variation (like a different database endpoint or domain name)? The answer is not to copy and paste your YAML ten times.

The state of the art is to use a GitOps fleet management pattern. Modern GitOps tools like ArgoCD (with ApplicationSets) and Flux are built for this. You create a single, templated definition of your application. The GitOps tool then uses metadata about your clusters (e.g., their name or region label) to automatically generate and apply the correct, context-specific configuration to each one. This allows you to manage the configuration for your entire fleet from a single source of truth, eliminating drift and manual effort.
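As an illustration of the templated approach, the sketch below builds an ArgoCD `ApplicationSet` that uses the cluster generator to stamp out one Application per registered cluster. It assumes the `argoproj.io/v1alpha1` API with the default template syntax; the repository URL, the `env` and `region` cluster labels, and the overlay path layout are hypothetical.

```python
import json

application_set = {
    "apiVersion": "argoproj.io/v1alpha1",
    "kind": "ApplicationSet",
    "metadata": {"name": "checkout-fleet", "namespace": "argocd"},
    "spec": {
        # One Application is generated per registered cluster matching the label.
        "generators": [
            {"clusters": {"selector": {"matchLabels": {"env": "prod"}}}}
        ],
        "template": {
            "metadata": {"name": "{{name}}-checkout"},
            "spec": {
                "project": "default",
                "source": {
                    "repoURL": "https://git.example.com/platform/checkout.git",  # hypothetical
                    "targetRevision": "HEAD",
                    # Per-cluster variation comes from a label on the cluster.
                    "path": "overlays/{{metadata.labels.region}}",
                },
                "destination": {"server": "{{server}}", "namespace": "checkout"},
            },
        },
    },
}

print(json.dumps(application_set, indent=2))
```

Registering an eleventh cluster with the matching label is then enough for it to receive the application, with no new YAML written by hand.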

Consistent Governance Across the Fleet

An inconsistent security policy is a critical vulnerability. You must ensure that every cluster in your fleet adheres to the same security standards, RBAC permissions, and resource quotas.

This is a powerful application of Policy-as-Code, managed via GitOps. Your security policies (written in OPA/Gatekeeper or Kyverno) are stored in a central Git repository. Your GitOps controller is then responsible for syncing this “policy bundle” to every cluster. When your security team needs to roll out a new policy, they make a single commit to the central repo, and the change is automatically enforced across the entire global fleet. This is the foundation of scalable, auditable governance.

From Fleet Governance to Autonomous Optimization

Once you have consistent configuration and policy, the final frontier is applying consistent, autonomous resource optimization across the entire fleet. This is where a platform like ScaleOps extends the governance model. It operates on a self-hosted model, deploying a small-footprint set of Kubernetes-native components to each cluster, which ensures your data never leaves your environment and is fully compatible with air-gapped requirements. From a single dashboard, you can connect to your entire estate, creating a unified view of the health and efficiency of your global fleet. This architecture allows ScaleOps to deliver all the benefits we’ve discussed—from proactive scaling and continuous rightsizing to intelligent node optimization—to every cluster, no matter where it’s located, turning fleet management from a challenge of manual governance into an opportunity for autonomous, global optimization.

Conclusion: The Future of Kubernetes in the Age of AI

We’ve journeyed through the seven pillars of modern Kubernetes management, from the foundational discipline of the cluster lifecycle to the global scale of fleet governance. The common thread is a relentless drive toward declarative automation, where operators define the “what,” and an increasingly intelligent platform handles the “how.”

This trend points toward a more radical future, especially in a world of advancing AI. Imagine a world of “intent-driven computing,” where developers state a business outcome, and a collection of AI agents build, deploy, secure, and continuously manage the required applications on demand.

This isn’t science fiction; it’s the logical extension of the principles we’ve discussed. Such a future will be built upon a universal, declarative control plane—an evolution of the Kubernetes API model—capable of orchestrating resources from massive cloud data centers to the network edge. In this world of bursty, on-demand AI workloads, elasticity becomes the single most critical capability of the platform.

The core challenge, therefore, evolves from managing static infrastructure to autonomously managing a dynamic, on-demand supply chain of resources, applications, and AI models that meet user and business demands. Navigating this future is fundamentally a global optimization problem, and success requires a partner whose core mission is to codify reliability, performance, and efficiency into the platform itself.