In the world of Kubernetes, managing resources efficiently is key to optimizing performance and minimizing costs. ScaleOps streamlines this process by automating resource allocation, allowing platform teams to focus on innovation while providing product teams with the insights they need to optimize their applications. This post explores how ScaleOps simplifies Kubernetes resource management, enhances platform engineering, and ensures secure, efficient operations.

Kubernetes has become the backbone of modern application deployment, offering the flexibility and scalability necessary for managing containerized workloads at scale. However, this power comes with significant complexity, particularly in managing the resources each pod requests. Mismanagement of these resources can lead to inefficiencies, increased costs, and even application downtime.

This is where ScaleOps comes into play. ScaleOps is an automatic resource management platform for Kubernetes designed to optimize resource utilization, improve cluster performance, and enhance availability. It accomplishes this by automatically adjusting the resource requests of pods, removing the need for manual tuning and ensuring that resources are used efficiently. In this blog post, we’ll explore how ScaleOps fits into the broader context of platform engineering and why it is essential for delivering a seamless experience for both platform and product teams.

Shielding Engineers From the Complexity of Resource Management

One of the primary responsibilities of platform engineering teams is to abstract the complexities of Kubernetes from the engineering teams who develop and deploy applications. Kubernetes, while powerful, requires careful management of resource requests—CPU, memory, and storage—to ensure that applications run smoothly without over-provisioning or under-provisioning resources.

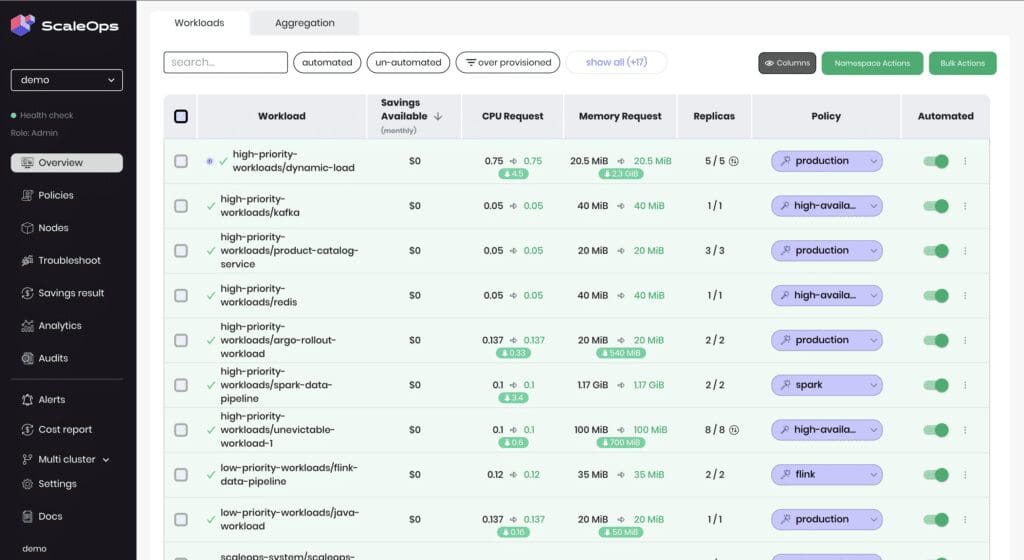

ScaleOps automates this process, dynamically adjusting the resource requests of running pods based on real-time usage data. This not only optimizes resource utilization but also enhances the overall performance and availability of the cluster. For platform teams, this automation means that the complexity of resource management is hidden from the product teams, allowing them to focus on building and delivering business value rather than worrying about the intricacies of Kubernetes.
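Adjusting requests "based on real-time usage data" can be illustrated with one common, generic approach: take a high percentile of observed usage and add a safety margin. The sketch below is not ScaleOps's algorithm. The function names, the 90th/99th percentile choices, and the 15% headroom are illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Recommendation:
    cpu_millicores: int
    memory_mib: int


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    # Nearest rank: the smallest sample with at least pct% of all samples at or below it.
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def with_headroom(value: float, headroom_pct: int) -> int:
    """Add a percentage safety margin and round up to a whole unit."""
    return math.ceil(value * (100 + headroom_pct) / 100)


def recommend_requests(
    cpu_samples_m: list[float],
    mem_samples_mib: list[float],
    cpu_pct: float = 90.0,
    mem_pct: float = 99.0,
    headroom_pct: int = 15,
) -> Recommendation:
    """Derive new pod requests from observed usage (illustrative defaults, not ScaleOps values)."""
    cpu = percentile(cpu_samples_m, cpu_pct)
    # Memory uses a higher percentile: CPU shortfalls cause throttling,
    # while memory shortfalls can cause OOM kills or evictions.
    mem = percentile(mem_samples_mib, mem_pct)
    return Recommendation(
        cpu_millicores=with_headroom(cpu, headroom_pct),
        memory_mib=with_headroom(mem, headroom_pct),
    )


rec = recommend_requests(
    cpu_samples_m=[100, 110, 120, 130, 140, 150, 160, 170, 180, 400],
    mem_samples_mib=[200] * 9 + [260],
)
print(rec)  # Recommendation(cpu_millicores=207, memory_mib=299)
```

With these samples, the single 400m CPU spike falls above the 90th percentile and does not inflate the CPU request. Memory is sized from its near-peak value because it is less forgiving than CPU.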

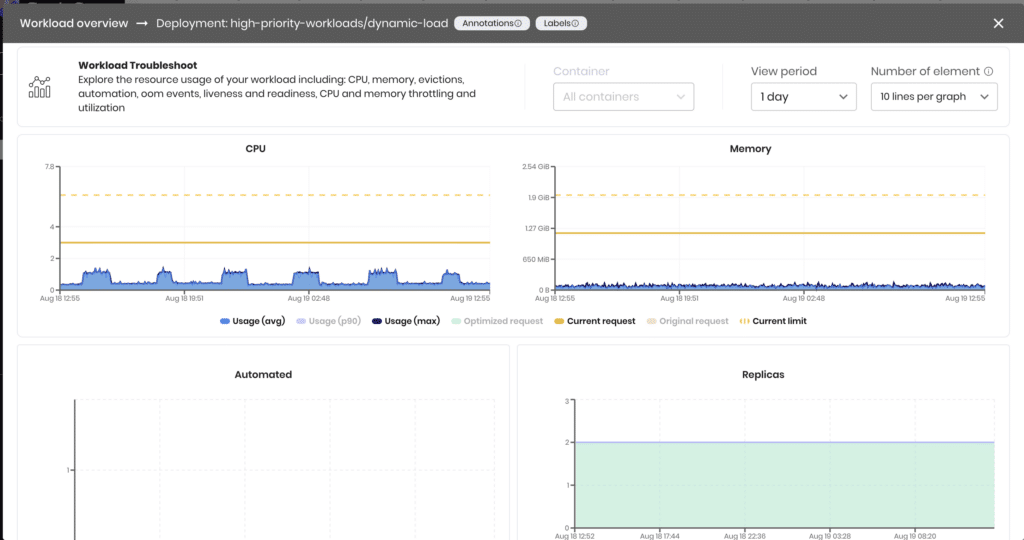

At the same time, ScaleOps empowers product teams by providing them with visibility into the behavior of their workloads in production. This balance between abstraction and transparency is crucial: while the underlying complexity is managed by the platform, product teams still have access to the insights they need to understand how their applications are performing, identify potential bottlenecks, and optimize their code accordingly.

Empowering Teams with Contextual Visibility

Although ScaleOps does the heavy lifting of continuously managing and optimizing pod-level resources, visibility into workload behavior remains essential for product teams to make informed decisions about application performance and scaling.

However, too much information can overwhelm teams, leading to confusion and inefficiency. ScaleOps addresses this by offering a platform that provides the necessary context without exposing unnecessary complexity.

Through intuitive dashboards and monitoring tools, ScaleOps delivers real-time insights into resource usage, performance metrics, and scaling events. Product teams can easily see how their applications are using resources, what adjustments have been made, and what impact these changes have on performance. This contextual visibility allows teams to stay informed and make data-driven decisions, all while relying on the platform to manage the underlying complexity.

By striking this balance, ScaleOps not only simplifies the day-to-day operations of platform teams but also empowers product teams to optimize their applications with confidence, knowing that the platform is handling resource management efficiently.

The Importance of Onboarding and User Experience

A powerful platform is only as good as its adoption. For ScaleOps to be successful, it needs to provide an easy onboarding process and a clear, intuitive user experience.

Onboarding with ScaleOps begins with a quick, straightforward installation that gets teams up and running in minutes. The design focuses on minimizing friction, so both platform and product teams can start deriving value almost immediately.

Beyond the initial setup, ScaleOps offers an intuitive user interface that simplifies interaction with the platform. Whether it’s accessing dashboards, setting up role management, or monitoring resource usage, the platform is designed to be user-friendly and accessible, even for teams that may not be deeply familiar with Kubernetes.

A seamless onboarding process and a user-friendly interface are key to ensuring that teams not only adopt the platform but also leverage its full potential. ScaleOps is committed to providing both, making it easier for organizations to realize the benefits of automatic resource management without the typical barriers to entry.

Providing a “Golden Path” Together with Customization Flexibility

One critical aspect of platform engineering is providing engineering teams with a “golden path”—a set of best practices that are baked into the platform, providing engineers with out-of-the-box solutions to different everyday use cases. For resource management in Kubernetes, this “golden path” often revolves around scaling policies that dictate how resources are allocated as demand changes.

ScaleOps offers a range of out-of-the-box scaling policies that are rooted in industry best practices. These policies are designed to provide an optimized, reliable approach to scaling that can be applied across various types of workloads. By implementing these best-practice scaling policies as the "golden path," ScaleOps ensures that product teams can follow a well-established, proven route for managing resources. This minimizes trial and error and lets engineers focus on what they do best: building products and delivering business value instead of dealing with underlying infrastructure concerns.

However, no matter how proven the "golden path" is and how many use cases the platform serves out of the box, there are always unique scenarios that call for a specific solution. In these cases, a good platform is measured by how flexible it is and how easily engineering teams can configure it to accommodate that need.

Recognizing this, ScaleOps provides the flexibility for teams to easily customize these scaling policies according to their unique use cases. Whether it’s adjusting thresholds, modifying scaling behaviors, or setting specific resource limits, ScaleOps makes it simple for teams to tailor the platform to their needs without deviating from the broader organizational standards.
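The balance between a golden path and per-team customization can be sketched as shared defaults plus validated overrides. The `ScalingPolicy` fields, the guardrail constants, and the `customize` helper below are hypothetical. They do not reflect ScaleOps's actual policy schema or API.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ScalingPolicy:
    """Hypothetical policy shape; not the ScaleOps configuration schema."""
    name: str
    cpu_percentile: float = 90.0
    memory_percentile: float = 99.0
    headroom_pct: int = 15
    max_memory_mib: int = 8192


# Hypothetical organization-wide guardrails that every customized policy must respect.
MIN_HEADROOM_PCT = 10
MAX_MEMORY_LIMIT_MIB = 16384

# The shared "golden path" defaults.
GOLDEN_PATH = ScalingPolicy(name="production-default")


def customize(base: ScalingPolicy, name: str, **overrides) -> ScalingPolicy:
    """Return a copy of `base` with overrides applied, rejecting values outside the guardrails."""
    policy = replace(base, name=name, **overrides)
    if policy.headroom_pct < MIN_HEADROOM_PCT:
        raise ValueError(f"{name}: headroom below organizational minimum of {MIN_HEADROOM_PCT}%")
    if policy.max_memory_mib > MAX_MEMORY_LIMIT_MIB:
        raise ValueError(f"{name}: memory cap above organizational maximum of {MAX_MEMORY_LIMIT_MIB} MiB")
    return policy


# A latency-sensitive service keeps the other defaults but tracks a higher CPU percentile
# and asks for more headroom.
latency_sensitive = customize(GOLDEN_PATH, "latency-sensitive", cpu_percentile=95.0, headroom_pct=30)
print(latency_sensitive)
```

Because the defaults are immutable and overrides go through `dataclasses.replace`, a team's customization never mutates the shared golden path. The guardrail checks are where organizational standards would be enforced.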

By providing a well-defined "golden path" for resource management while also offering the flexibility to customize scaling policies and automatically match them to workloads, ScaleOps enables platform teams to maintain consistency and reliability across the organization. At the same time, product teams retain the ability to fine-tune their environments to meet specific performance needs, all within the framework of best practices.

Robust Role Management for Secure and Efficient Operations

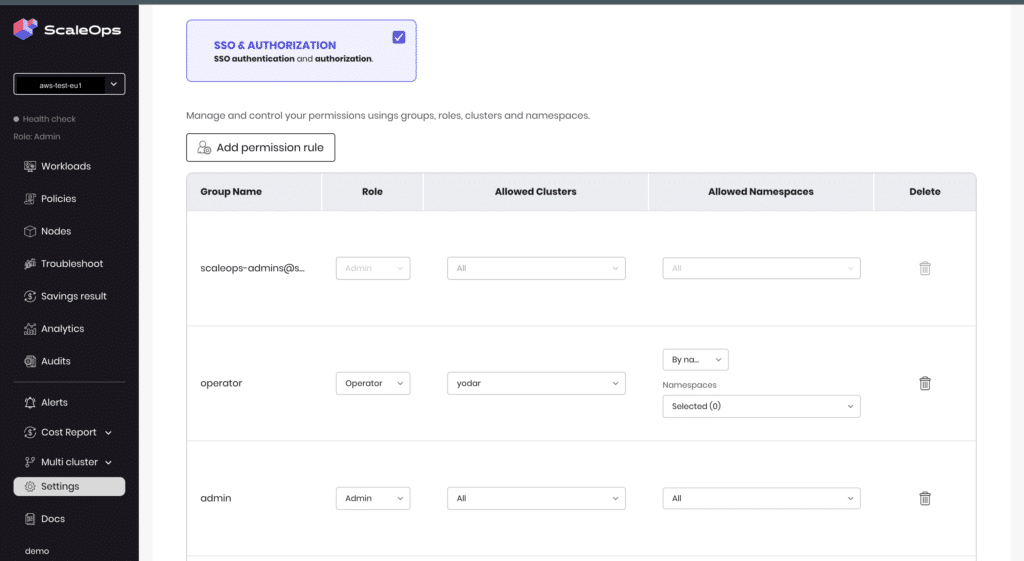

As organizations scale their Kubernetes environments, managing access and permissions becomes increasingly important. Different teams within an organization—such as development, QA, and operations—need to interact with different workloads, often within specific namespaces. Without a robust role management system, there’s a risk of unauthorized access, security breaches, and operational inefficiencies.

ScaleOps provides a robust role-based access control (RBAC) mechanism. This allows platform teams to define and enforce permissions, ensuring that teams can only view and act on the workloads they are authorized to manage. By clearly delineating access rights, ScaleOps helps maintain security, prevent unauthorized changes, and streamline operations across the organization.

This role-based approach not only enhances security but also simplifies the user experience for product teams. By limiting their view to only the resources relevant to their responsibilities, teams can focus on their specific tasks without being distracted by unrelated workloads.
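The namespace-scoped access model described above resembles Kubernetes RBAC, where roles are bound to namespaces. The sketch below illustrates it. The `Role` and `Workload` types and the `visible_workloads` and `can_edit` helpers are hypothetical illustrations, not ScaleOps's RBAC implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    namespace: str
    name: str


@dataclass(frozen=True)
class Role:
    """Hypothetical role: a set of namespaces plus whether members may change policies."""
    namespaces: frozenset[str]
    can_edit_policies: bool = False


def visible_workloads(role: Role, workloads: list[Workload]) -> list[Workload]:
    """Return only the workloads in namespaces the role is scoped to."""
    return [w for w in workloads if w.namespace in role.namespaces]


def can_edit(role: Role, workload: Workload) -> bool:
    """Edits require both namespace scope and the edit permission."""
    return workload.namespace in role.namespaces and role.can_edit_policies


cluster = [
    Workload("payments", "api"),
    Workload("payments", "worker"),
    Workload("qa", "load-generator"),
    Workload("platform", "ingress"),
]

qa_team = Role(namespaces=frozenset({"qa"}))
payments_team = Role(namespaces=frozenset({"payments"}), can_edit_policies=True)

print([w.name for w in visible_workloads(qa_team, cluster)])  # ['load-generator']
print(can_edit(payments_team, cluster[0]), can_edit(qa_team, cluster[2]))  # True False
```

Filtering by namespace both enforces access and narrows what each team sees, which is the security and focus benefit the paragraph describes.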

Conclusion: Transforming Platform Engineering with ScaleOps

Automatic resource management is a crucial component of modern platform engineering, particularly in the context of Kubernetes. With ScaleOps, platform teams can effectively hide the complexity of resource management while giving product teams the visibility and context they need to understand the dynamics of their workloads. Robust role management ensures secure and efficient operations, while a focus on onboarding and user experience drives adoption and long-term success.

As Kubernetes continues to be the platform of choice for containerized applications, platforms like ScaleOps are essential for managing the complexity and ensuring that both platform and product teams can operate efficiently and effectively.

Ready to simplify Kubernetes resource management and optimize your cluster’s performance? Experience how ScaleOps can transform your platform engineering today. Visit ScaleOps to learn more and start your journey toward effortless Kubernetes management.