Kubernetes has become the de facto standard for container orchestration, but managing resources efficiently remains a challenge. Two prominent solutions in this space are ScaleOps and Goldilocks. While both platforms aim to optimize Kubernetes resource management, ScaleOps offers a suite of features that make it a more comprehensive and powerful choice. Let’s delve into the detailed comparison and highlight why ScaleOps stands out.

What Are ScaleOps and Goldilocks?

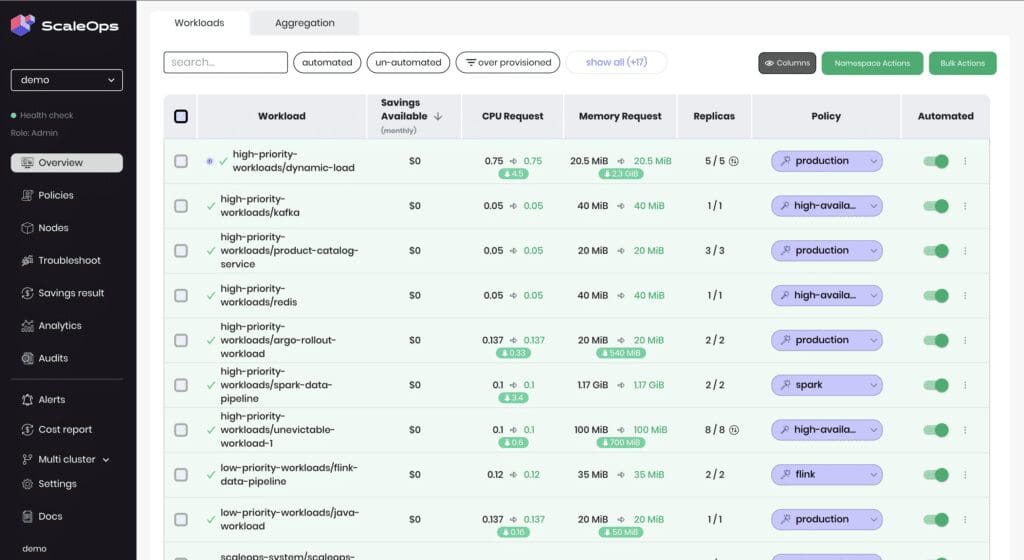

ScaleOps provides real-time automated optimization, while Goldilocks offers static recommendations for manual implementation.

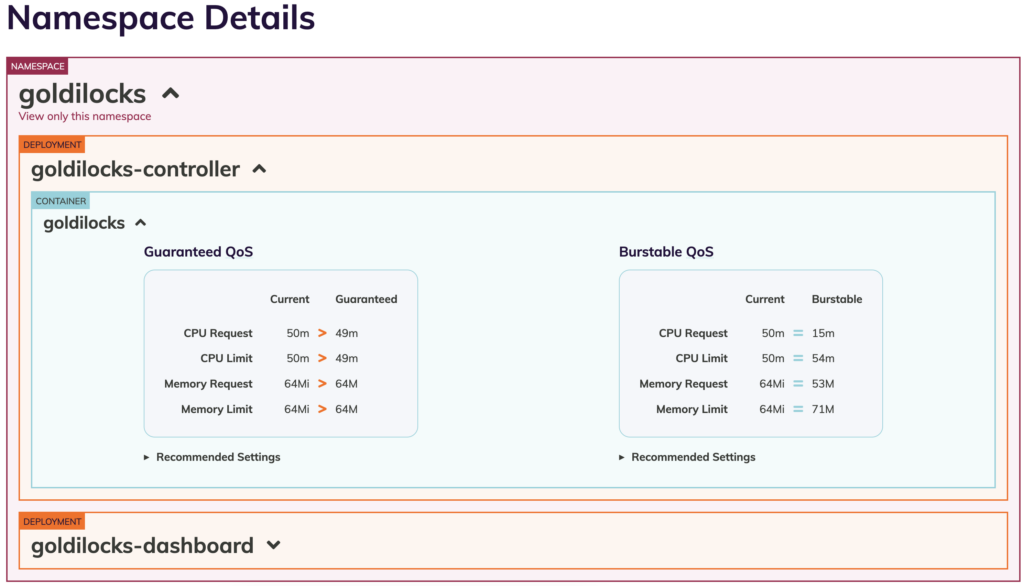

Goldilocks is an open-source utility that provides static recommendations for Kubernetes resource requests and limits. It runs the Vertical Pod Autoscaler (VPA) in recommendation mode, so the VPA computes suggested values without changing any pods, and presents those values in a dashboard.
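To make "recommendation mode" concrete, here is a minimal sketch of the kind of VPA object involved, built as a Python dictionary and printed as JSON. The workload name `web`, the namespace `demo`, and the `-recommender` suffix are hypothetical; the part that matters is `updateMode: "Off"`, which tells the VPA to compute recommendations without evicting or resizing pods.

```python
import json


def recommendation_only_vpa(name: str, namespace: str, kind: str = "Deployment") -> dict:
    """Build a VerticalPodAutoscaler manifest that recommends but never applies changes."""
    return {
        "apiVersion": "autoscaling.k8s.io/v1",
        "kind": "VerticalPodAutoscaler",
        # Hypothetical naming scheme, chosen only for this example.
        "metadata": {"name": f"{name}-recommender", "namespace": namespace},
        "spec": {
            # The workload whose pods the VPA observes.
            "targetRef": {"apiVersion": "apps/v1", "kind": kind, "name": name},
            # "Off" means: publish recommendations in status, do not touch pods.
            "updatePolicy": {"updateMode": "Off"},
        },
    }


if __name__ == "__main__":
    print(json.dumps(recommendation_only_vpa("web", "demo"), indent=2))
```

The printed JSON is a valid manifest body, so it could be piped to `kubectl apply -f -` in a test cluster that has the VPA installed.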

ScaleOps is a complete, autonomous platform for real-time Kubernetes resource management. It provides continuous, automated optimization based on live cluster conditions and workload behavior.

Key differences:

- Automation level: ScaleOps automatically applies changes; Goldilocks provides recommendations only

- Optimization scope: ScaleOps handles full resource management; Goldilocks focuses on pod rightsizing

- Production readiness: ScaleOps is built for mission-critical environments; Goldilocks is primarily for analysis

Installation and Onboarding

Installation comparison:

- Getting started: ScaleOps includes out-of-the-box policies; Goldilocks requires the VPA to be installed and target namespaces to be labeled before recommendations appear

- Setup time: Both tools install in under 2 minutes via Helm

- Deployment options: Both support self-hosting for security and control

Pod Rightsizing

Optimizing container resources is crucial for maintaining application performance while minimizing costs.

| Feature | ScaleOps | Goldilocks |
| --- | --- | --- |
| Optimization type | Real-time, continuous | Static recommendations |
| Implementation | Automated changes | Manual application required |
| Workload support | All workload types | Native Kubernetes controllers only |
| Training period | Immediate optimization | Requires historical data collection |

ScaleOps:

- Continuous Optimization: Unlike Goldilocks, which typically provides static recommendations, ScaleOps continuously monitors and adjusts container resource allocations. This ensures that resources are always optimized according to the current workload demands.

- Context-Aware and Fast Reaction: ScaleOps combines history-based reactive models with forward-looking proactive and context-aware models, allowing it to intelligently scale workload resource requests to adjust for real-time changes in resource demands.

- Pod Specification Level: ScaleOps fine-tunes the resource allocations at the pod specification level, meaning that both CPU and memory resources are adjusted in real-time to meet the needs of each container. This helps prevent over-provisioning, which can lead to wasted resources, and under-provisioning, which can cause performance issues.

- Supported Workloads: ScaleOps automatically detects and identifies any type of workload, including custom workloads. It optimizes pods not owned by native Kubernetes controllers with out-of-the-box tailored policies, providing a more comprehensive solution.

Goldilocks:

- Primarily focuses on providing initial rightsizing recommendations only for native Kubernetes workloads based on historical usage data. While useful, this approach lacks the continuous optimization necessary to adapt to changing workload demands.

- Because recommendations are derived only from historical usage patterns, a long data-collection period is needed before they become workable.
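As a rough illustration of history-based rightsizing, the sketch below derives a CPU request from past usage samples by taking a high percentile and adding headroom. The 90th percentile, the 15% margin, and the sample values are illustrative assumptions, not the documented parameters of Goldilocks or ScaleOps. The point is only that the result depends on how much history has been collected.

```python
import math


def percentile(samples: list[int], pct: int) -> int:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_cpu_request(usage_millicores: list[int], pct: int = 90, headroom_pct: int = 15) -> int:
    """Suggest a CPU request (millicores) from historical usage plus a safety margin."""
    base = percentile(usage_millicores, pct)
    return math.ceil(base * (100 + headroom_pct) / 100)


# Hypothetical per-pod CPU usage samples in millicores.
history = [100, 120, 110, 400, 130, 125, 115, 105, 135, 140]

short_window = recommend_cpu_request(history[:3])  # only the first three samples
full_window = recommend_cpu_request(history)       # all ten samples
print(short_window, full_window)
```

With only three samples the suggestion is lower than with the full window, which is why a recommendation taken too early can under-provision a workload.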

Custom Scaling Policies

Effective scaling policies maintain performance and availability in dynamic environments.

ScaleOps scaling advantages:

- Out-of-the-box policies: Zero-effort setup with immediate savings

- Application-aware: Automatically detects and optimizes based on workload type

- Custom strategies: Create bespoke policies for specific metrics and thresholds

Goldilocks limitations:

- Basic recommendations: Limited customization without application context

Auto-Healing and Fast Reaction

In a Kubernetes environment, unexpected issues such as sudden traffic spikes or node failures can occur at any time. ScaleOps provides robust auto-healing and fast reaction mechanisms to maintain stability and performance.

ScaleOps:

- Proactive and Reactive Mechanisms: ScaleOps employs a combination of proactive and reactive strategies to address issues. Proactively, it monitors for potential problems and makes adjustments before they impact performance. Reactively, it quickly responds to unexpected events such as traffic surges or node failures.

- Issue Mitigation: The platform automatically mitigates issues caused by sudden bursts in traffic or stressed nodes, ensuring that applications remain stable and performant. For instance, if a node becomes stressed due to high CPU or memory usage, ScaleOps can redistribute the load or scale up resources to maintain performance.

- Zero Downtime Optimization: By using sophisticated algorithms and zero downtime mechanisms, ScaleOps ensures that optimizations are applied without disrupting ongoing workloads. This is crucial for maintaining the availability of mission-critical applications.

Goldilocks:

- Lacks proactive auto-healing capabilities and relies more on static recommendations, which may not be sufficient to handle dynamic and unpredictable Kubernetes environments.

Integration and Compatibility

Production environments require seamless integration without disrupting existing workflows.

Integration comparison:

Goldilocks operates by creating VPA objects for targeted workloads. It works in read-only, recommendation-only mode, so applying its suggestions requires manual changes to the workload manifests that your CI/CD pipelines deploy.
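Applying a recommendation by hand usually means copying values from the VPA object's status into the manifest your pipeline deploys. The sketch below assumes the standard VPA status layout (`status.recommendation.containerRecommendations` with `target` and `upperBound` entries) and a simple hypothetical policy: requests are set to the target and limits to the upper bound. The sample object and its values are made up for illustration.

```python
def resources_from_vpa(vpa: dict) -> dict[str, dict]:
    """Map each container name to a `resources` stanza derived from a VPA recommendation."""
    recs = (
        vpa.get("status", {})
        .get("recommendation", {})
        .get("containerRecommendations", [])
    )
    return {
        rec["containerName"]: {
            "requests": dict(rec["target"]),     # hypothetical policy: request = target
            "limits": dict(rec["upperBound"]),   # hypothetical policy: limit = upper bound
        }
        for rec in recs
    }


# Made-up VPA object, trimmed to the fields this sketch reads.
sample_vpa = {
    "status": {
        "recommendation": {
            "containerRecommendations": [
                {
                    "containerName": "web",
                    "lowerBound": {"cpu": "100m", "memory": "128Mi"},
                    "target": {"cpu": "250m", "memory": "256Mi"},
                    "upperBound": {"cpu": "500m", "memory": "512Mi"},
                }
            ]
        }
    }
}

print(resources_from_vpa(sample_vpa))
```

The returned stanza still has to be reviewed and committed to the manifest by a person, which is the manual step described above.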

ScaleOps integrates natively with existing HPA and KEDA configurations. It enhances your current stack with predictive scaling and real-time rightsizing. GitOps integration with ArgoCD and Flux ensures automated changes are auditable.
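One reason rightsizing has to be coordinated with an existing HPA: a utilization-based HPA measures CPU usage as a percentage of the pod's CPU request, so changing the request changes the replica count the HPA asks for. The sketch below uses the replica formula from the Kubernetes HPA documentation, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The workload numbers are hypothetical, the HPA tolerance band is ignored, and nothing here describes how ScaleOps itself handles the interaction.

```python
import math


def hpa_desired_replicas(
    current_replicas: int,
    usage_millicores: int,
    request_millicores: int,
    target_utilization_pct: int,
) -> int:
    """Replica count a utilization-based HPA would ask for (tolerance band ignored)."""
    # Utilization (%) = 100 * usage / request, so
    # desired = ceil(current * utilization / target).
    return math.ceil(
        current_replicas * usage_millicores * 100
        / (request_millicores * target_utilization_pct)
    )


# Same usage per pod (300m), same 70% target; only the CPU request differs.
before = hpa_desired_replicas(4, usage_millicores=300, request_millicores=500, target_utilization_pct=70)
after = hpa_desired_replicas(4, usage_millicores=300, request_millicores=350, target_utilization_pct=70)
print(before, after)
```

Lowering the request from 500m to 350m raises measured utilization from 60% to about 86%, so the same traffic now triggers a scale-out. A tool that changes requests automatically needs to account for this when an HPA is present.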

Key integration benefits:

- No stack replacement: Works with your existing autoscaling tools

- Policy-aware automation: Changes follow organizational governance rules

- Production-ready: Designed for mission-critical environments

When to Choose Each Tool

The right choice depends entirely on your team’s operational maturity and the environment you are managing.

Choose Goldilocks if:

- You are in the early stages of Kubernetes adoption and need a simple way to get initial resource recommendations.

- You are running non-production or development clusters where manual tuning is acceptable.

- Your primary goal is to gather data for a one-time rightsizing effort.

- You have a small number of services, and your engineering team has the bandwidth to manually review and apply recommendations.

Choose ScaleOps if:

- You are running mission-critical production workloads at scale.

- You need to reduce cloud costs without sacrificing performance or reliability.

- Your engineering team is spending too much time on manual resource tuning and reactive troubleshooting.

- You require a fully automated, hands-off system that operates continuously in real time.

- You need to optimize resources beyond just pods, including replicas, node selection, and pod placement.

Making the Right Choice for Your Kubernetes Environment

The choice between Goldilocks and ScaleOps is a choice between information and action. Goldilocks provides valuable information, giving you a snapshot of potential optimizations. It answers the question, ‘What could my resource requests look like?’

ScaleOps delivers action. It provides a continuous, autonomous control loop that ensures your resources are always aligned with real-time demand. It answers the question, ‘How can I guarantee my cluster is always optimized for cost and performance without manual intervention?’

For teams operating at scale in production, where reliability and efficiency are non-negotiable, the need for an automated, active system is clear. ScaleOps provides the production-grade automation required to manage the complexity of modern Kubernetes environments.

Ready to revolutionize your Kubernetes resource management? Try ScaleOps today and experience the difference. Visit ScaleOps to get started!

Frequently Asked Questions About ScaleOps vs Goldilocks

Which tool is better for teams new to Kubernetes resource optimization?

ScaleOps provides immediate value with out-of-the-box automation, while Goldilocks requires manual implementation of recommendations.

Can I use ScaleOps and Goldilocks together in the same cluster?

Running both is redundant, since ScaleOps provides real-time analysis that replaces Goldilocks’ static recommendations.

How do the solutions compare in terms of resource overhead and performance impact?

Both are lightweight. ScaleOps runs as a control plane inside the cluster with minimal resource consumption.

What happens to my existing VPA configurations when implementing either tool?

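Goldilocks creates its own recommendation-only VPA objects for the workloads it targets. For ScaleOps-managed workloads, remove existing VPA configurations to avoid conflicts, since ScaleOps handles vertical scaling itself.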

Which tool provides better visibility into optimization decisions and changes?

ScaleOps offers comprehensive observability with cost monitoring and ROI tracking, while Goldilocks provides basic recommendation dashboards.