Kubernetes Resource Management on Auto-Pilot

ScaleOps is the only platform that fully automates resource optimization based on real-time demand and application context.

The Complete Platform for Kubernetes Resources

Real-Time Pod Rightsizing

ScaleOps automates resource requests and limits per pod based on real-time demand, freeing engineers from repeated manual tuning of CPU and memory allocation across the cluster. This results in up to 80% cloud cost savings.

Start saving in minutes, not days

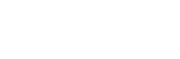

Replicas Optimization

Automatically scale horizontally, before demand hits

Automated Smart Pod Placement

ScaleOps automates and optimizes the placement of unevictable pods, allocating them to the best-suited nodes so that underutilized nodes can scale down. The result is cloud cost savings of up to 50% without sacrificing performance.

Instant Value with Seamless Automation

Install with a single Helm command. That’s it.

Cluster-Level & Workload-Level Troubleshooting

ScaleOps provides dashboards and tools for diagnosing issues from the cluster level down to specific workloads, enhancing visibility and enabling proactive management.

Your workloads, for 80% less

Cost Monitoring

ScaleOps offers a comprehensive view of compute, network, and GPU costs, enabling better cloud spend management and significant savings.

Here’s What People Are Saying About Us
