Effective resource management in Kubernetes environments is crucial for optimizing application performance and reducing operational overhead. Autoscaling solutions play a vital role by dynamically adjusting resource allocation to match workload demand. In this article, we’ll compare ScaleOps with Cluster Autoscaler, the Horizontal Pod Autoscaler (HPA), the Vertical Pod Autoscaler (VPA), and Kubernetes-based Event-Driven Autoscaling (KEDA) to understand their differences and strengths.

Understanding Autoscaling

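Autoscaling in Kubernetes automatically adjusts resource allocation as workload demand changes, balancing application performance against resource utilization. It works at two levels: node autoscalers such as Cluster Autoscaler and Karpenter change the size of the cluster, while pod autoscalers such as HPA, KEDA, and VPA change how many pods run or how many resources each pod requests.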

Cluster Autoscaler

Cluster Autoscaler adjusts the size of the Kubernetes cluster itself by adding nodes to, or removing nodes from, predefined node groups. It scales up when pods remain unschedulable (Pending) because no existing node can satisfy their resource requests and scheduling constraints, and it scales down when a node’s requested resources stay below a utilization threshold and its pods can be safely rescheduled elsewhere. Because these decisions are based on pod resource requests rather than live usage, accurate requests are essential. Cluster Autoscaler responds to rising demand by provisioning new nodes automatically, although new nodes take some time to become ready.
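
A minimal Python sketch of these two decisions, under simplifying assumptions: the `Node` class and the helper functions are hypothetical, resources are reduced to CPU millicores and memory MiB, and the 0.5 threshold is assumed to match Cluster Autoscaler’s default scale-down utilization threshold. The real Cluster Autoscaler also simulates the scheduler’s full set of constraints (affinity, taints, PodDisruptionBudgets) and waits for a node to stay underutilized for a while before removing it.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A node with allocatable capacity and the requests of the pods bound to it.

    Pods are modeled as (cpu_millicores, memory_mib) tuples; this is a hypothetical
    simplification, not Cluster Autoscaler's real data model.
    """
    name: str
    alloc_cpu: int
    alloc_mem: int
    pods: list = field(default_factory=list)

    def requested(self):
        # Sum of pod *requests*; live usage is deliberately ignored.
        return sum(p[0] for p in self.pods), sum(p[1] for p in self.pods)

    def fits(self, pod):
        cpu, mem = self.requested()
        return cpu + pod[0] <= self.alloc_cpu and mem + pod[1] <= self.alloc_mem

    def utilization(self):
        # Requested / allocatable, taking the higher of the CPU and memory ratios.
        cpu, mem = self.requested()
        return max(cpu / self.alloc_cpu, mem / self.alloc_mem)


def needs_scale_up(nodes, pending):
    """Scale up if at least one pending pod fits on no existing node."""
    return any(not any(n.fits(p) for n in nodes) for p in pending)


def scale_down_candidates(nodes, threshold=0.5):
    """Names of underutilized nodes whose pods could all move to the other nodes.

    Each node is checked independently against copies of the other nodes, so two
    candidates are not guaranteed to be removable at the same time.
    """
    candidates = []
    for node in nodes:
        if node.utilization() >= threshold:
            continue
        others = [Node(o.name, o.alloc_cpu, o.alloc_mem, list(o.pods))
                  for o in nodes if o is not node]
        movable = True
        for pod in node.pods:
            target = next((o for o in others if o.fits(pod)), None)
            if target is None:
                movable = False
                break
            target.pods.append(pod)  # reserve room so later pods see reduced capacity
        if movable:
            candidates.append(node.name)
    return candidates
```

For example, with node `a` (4000m CPU, 8192 MiB, running one 3000m/4096 MiB pod) and node `b` (same size, running one 500m/512 MiB pod), a pending 3800m pod triggers a scale-up because neither node has room, while `b` is a scale-down candidate because its pod fits on `a`.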

Karpenter is a well-maintained and widely used open-source node provisioner that takes a more flexible approach than Cluster Autoscaler. Rather than resizing predefined node groups, it watches for unschedulable pods and provisions individual nodes directly through your cloud provider, choosing instance types that fit those pods’ resource requirements. It can also consolidate workloads onto fewer or cheaper nodes. This leads to better resource utilization and potentially lower costs.
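
To make “tailoring a node to pending pods” concrete, here is a minimal Python sketch that picks the cheapest instance type able to hold a batch of pending pods. The catalog, instance names, and prices are hypothetical, and the sketch ignores much of what a real provisioner such as Karpenter weighs (zones, capacity type, per-node system overhead, splitting pods across several nodes).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    cpu: int      # millicores
    mem: int      # MiB
    price: float  # illustrative hourly price, not real cloud pricing


# Hypothetical catalog; a real provisioner would query the cloud provider.
CATALOG = [
    InstanceType("small", 2000, 4096, 0.10),
    InstanceType("medium", 4000, 8192, 0.20),
    InstanceType("large", 8000, 32768, 0.45),
]


def pick_instance(pending, catalog=CATALOG):
    """Return the cheapest instance type that fits all pending pods, or None.

    `pending` is a list of (cpu_millicores, memory_mib) request tuples.
    """
    need_cpu = sum(cpu for cpu, _ in pending)
    need_mem = sum(mem for _, mem in pending)
    fitting = [t for t in catalog if t.cpu >= need_cpu and t.mem >= need_mem]
    return min(fitting, key=lambda t: t.price, default=None)
```

Sizing the node to the pending pods, instead of adding another copy of a fixed node shape, is what lets this approach avoid stranded capacity.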

Horizontal Pod Autoscaler (HPA)

The Horizontal Pod Autoscaler (HPA) is Kubernetes’ built-in tool for dynamically adjusting the number of pod replicas based on observed metrics such as CPU or memory utilization, or on custom and external metrics. It keeps applications responsive under fluctuating demand without manual intervention, and it lowers costs by removing replicas when load drops. Because utilization targets are measured relative to each pod’s resource requests, HPA works best when those requests are set accurately. HPA is best suited to workloads with unpredictable or cyclical traffic, such as web applications whose user load varies throughout the day.
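
The Kubernetes documentation describes HPA’s core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no scaling action when the ratio is within a tolerance (0.1 by default). Here’s a minimal Python sketch of that rule; the function name and the `min_replicas`/`max_replicas` parameters are illustrative, and it omits the real controller’s stabilization windows, scaling policies, and handling of missing metrics and not-yet-ready pods.

```python
import math


def hpa_desired_replicas(current_replicas, current_value, target_value,
                         min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA replica calculation for a single metric.

    current_value and target_value share a unit, e.g. average CPU utilization
    as a percentage of the pods' CPU requests.
    """
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 replicas averaging 90% CPU against a 60% target yield `ceil(4 * 1.5) = 6` replicas, while 63% against the same target stays at 4 because the ratio falls inside the tolerance.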

Kubernetes-based Event-Driven Autoscaling (KEDA)

KEDA extends the Horizontal Pod Autoscaler (HPA) rather than replacing it. It exposes metrics from external event sources, such as message queue depth or custom metrics, and manages an HPA that scales the workload on those metrics. KEDA also handles scaling between zero and one replica itself, which the HPA alone cannot do, so idle consumers can scale all the way down to zero. This makes it well suited to event-driven architectures, where workload demand fluctuates with external triggers, while keeping resource allocation efficient and applications responsive.
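
Here’s a rough Python sketch of how queue-based scaling plays out under KEDA, assuming a scaler whose target is an average number of messages per replica and a minimum replica count of zero. The function and its parameters are hypothetical; the sketch ignores KEDA’s cooldown period and polling interval as well as the HPA’s stabilization behavior.

```python
import math


def keda_style_replicas(queue_length, target_per_replica, max_replicas=20,
                        activation_threshold=0):
    """Approximate replica count for a queue-driven workload (hypothetical model)."""
    # Phase 1, handled by KEDA itself: the scaler is inactive at or below the
    # activation threshold, so the workload scales to zero.
    if queue_length <= activation_threshold:
        return 0
    # Phase 2, handled by the HPA that KEDA manages: an average-value target
    # asks for one replica per `target_per_replica` queued messages.
    desired = math.ceil(queue_length / target_per_replica)
    return max(1, min(max_replicas, desired))
```

With a target of 10 messages per replica, an empty queue gives 0 replicas, 5 messages give 1, and 95 messages give 10.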

Vertical Pod Autoscaler (VPA)

VPA dynamically adjusts pod resource requests and limits based on observed resource usage, offering granular control over resource allocation within individual pods. It provides significant value by preventing both over-provisioning and under-provisioning, but it requires careful tuning and monitoring, and in its standard update modes it usually applies new values by evicting pods so they are recreated with updated requests. One drawback is that it should not be combined with the Horizontal Pod Autoscaler (HPA) on the same CPU or memory metrics: when VPA raises a pod’s requests, the utilization percentage that HPA observes drops, so the two controllers can work against each other.
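
To illustrate how a recommendation can be derived from observed usage, the following Python sketch takes a high percentile of usage samples and adds a safety margin. The defaults (90th percentile, 15% margin) are assumed to loosely mirror the VPA recommender’s default target percentile and margin fraction; the real recommender works on decaying histograms of usage and memory peaks, so treat this as a hypothetical simplification rather than VPA’s algorithm.

```python
def recommend_request(samples, percentile_pct=90, safety_margin_pct=15):
    """Recommend a request (e.g. CPU millicores) from integer usage samples.

    Hypothetical simplification: nearest-rank percentile of the samples plus a
    safety margin, using integer arithmetic to avoid floating-point surprises.
    """
    if not samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(samples)
    # Nearest-rank percentile: rank = ceil(pct * n / 100), 1-based, clamped to [1, n].
    rank = min(len(ordered), max(1, -(-percentile_pct * len(ordered) // 100)))
    base = ordered[rank - 1]
    # Add the safety margin, rounding down to a whole unit.
    return base * (100 + safety_margin_pct) // 100
```

With ten CPU samples from 100m to 1000m, the 90th percentile is 900m and the recommendation is 1035m. Basing the request on a high percentile rather than the peak keeps rare spikes from inflating every pod’s request.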

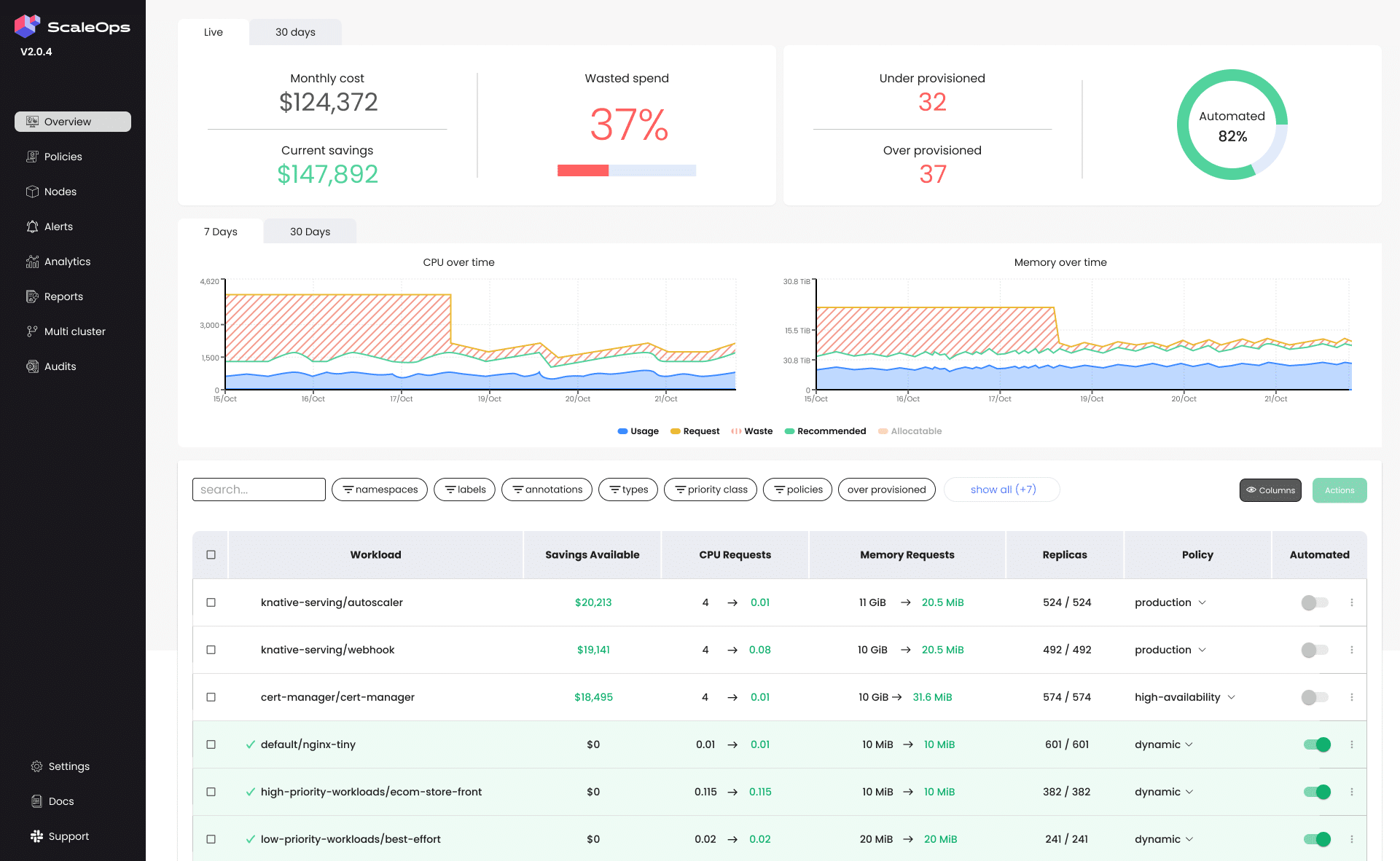

ScaleOps: A Comprehensive Solution

ScaleOps integrates with Cluster Autoscaler, HPA, KEDA, and VPA, offering a comprehensive solution for Kubernetes resource management. It enhances cluster autoscalers so that they operate efficiently and adjust cluster capacity to match workload requirements. Its key features include dynamic resource allocation, intelligent scaling, policy detection, auto-healing, and zero-downtime optimization.

Comparative Analysis

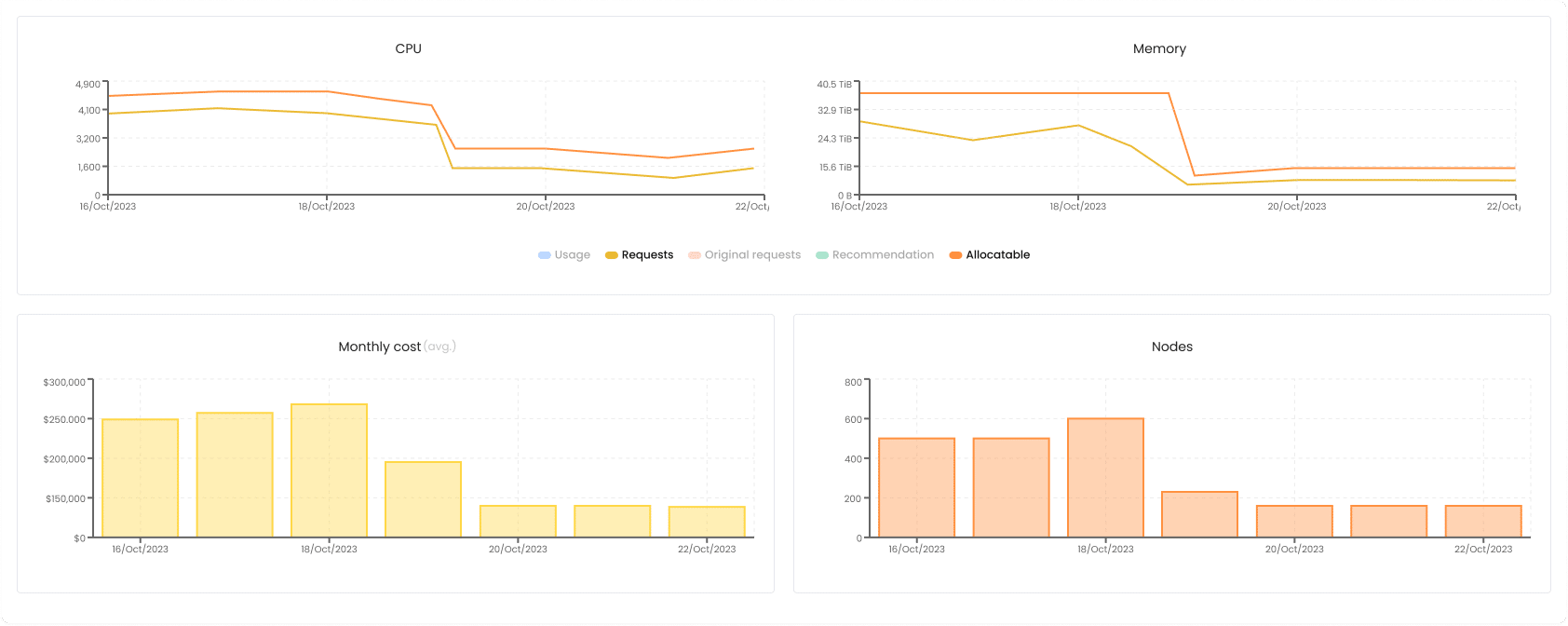

- Cost reduction: By integrating with and enhancing the autoscaling tools used in Kubernetes, ScaleOps ensures efficient resource allocation, reducing over-provisioning and unnecessary infrastructure costs. Its streamlined management also minimizes operational overhead, lowering costs while maintaining high performance.

- Performance: ScaleOps improves application responsiveness, latency, and user experience by allocating resources and scaling workloads dynamically as demand changes.

- Scalability: ScaleOps leads in scalability by combining node-level, horizontal, and vertical scaling in one integrated approach. This ensures efficient scaling across varying workload demands, so organizations can adjust resource allocation dynamically as requirements change.

- Flexibility: ScaleOps adapts to different workload types and resource requirements by integrating with each of these scaling mechanisms. This lets organizations tailor scaling strategies to their specific application architectures and business needs while maintaining performance and resource utilization.

- Zero onboarding time: ScaleOps automatically detects workloads and attaches each one to the most suitable scaling policy, so teams don’t need to spend time learning and tailoring their own policies. It works out of the box.

Conclusion

ScaleOps stands out as a comprehensive solution for Kubernetes resource management, integrating seamlessly with HPA, VPA, KEDA, and Cluster Autoscaler to optimize performance, reduce costs, and free engineers from repetitive manual configuration. Organizations can leverage ScaleOps’ capabilities to achieve efficient resource utilization and ensure optimal application performance in dynamic environments.

If you’re eager to automate Kubernetes resource management and explore ScaleOps more deeply, sign up for a free trial and see the value immediately.

Happy optimizing!