As organizations increasingly adopt Kubernetes for container orchestration, the need for efficient resource management becomes paramount. Automatic pod rightsizing is a key aspect of this, ensuring optimal resource allocation and cost efficiency. This blog post compares Kubernetes Vertical Pod Autoscaler (VPA) and the ScaleOps platform, highlighting why ScaleOps stands out as the superior choice for automatic pod rightsizing.

1. Zero Downtime

VPA: VPA adjusts resource requests for pods, but it typically requires restarting the pods to apply the new configurations. This can lead to downtime, particularly for single-replica workloads, impacting the application’s availability.

ScaleOps: ScaleOps ensures zero downtime for single-replica workloads by using a smart rollout strategy. It creates a new pod before replacing the old one, allowing for seamless transitions without any service interruptions.

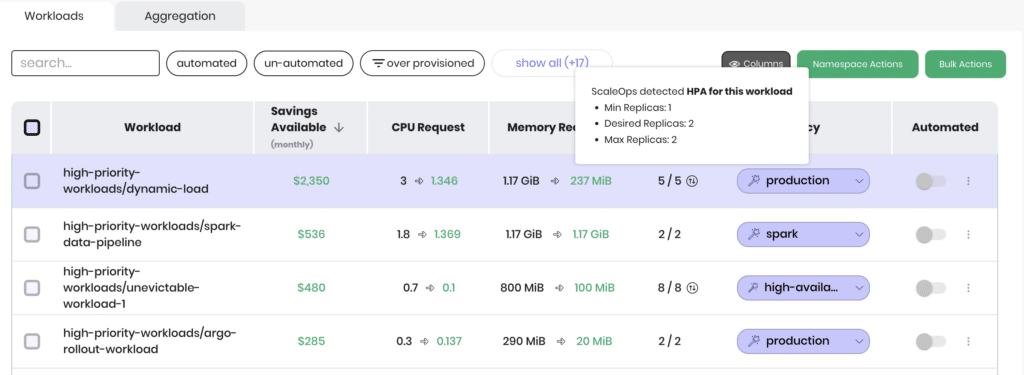

2. HPA Integration

VPA: VPA primarily focuses on vertical scaling based on historical usage and recommendations, but it does not inherently integrate with Horizontal Pod Autoscaler (HPA) for real-time scaling based on CPU and memory metrics.

ScaleOps: ScaleOps seamlessly integrates with HPA, supporting CPU and memory-based metrics without user intervention. This enhanced integration allows for more dynamic and effective scaling capabilities.

ScaleOps seamlessly integrates with HPA and Keda-based workloads

3. Auto Healing

VPA: VPA does not include built-in mechanisms for auto-healing based on real-time events. It focuses on adjusting resource requests and limits based on historical data.

ScaleOps: ScaleOps monitors API-server events like OOMs and liveness issues to take immediate corrective action. This ensures optimal performance by addressing issues as they arise, enhancing the reliability of your workloads.

4. Fast Response Time

VPA: VPA operates based on historical usage patterns, which can result in slower response times to sudden changes in workload demands. This might lead to resource shortages during unexpected usage spikes.

ScaleOps: ScaleOps continuously adapts to changing usage patterns with a fast-reaction mechanism. It monitors live traffic and node state, taking immediate action to adjust resource requests during sudden usage spikes, ensuring consistent performance.

5. Supported Workloads

VPA: VPA works well with workloads managed by native Kubernetes controllers but may require additional configuration for custom workloads or those not natively supported.

ScaleOps: ScaleOps automatically identifies a wide range of workloads, including custom workloads. It optimizes pods not owned by native Kubernetes controllers with out-of-the-box tailored policies, providing a more comprehensive solution.

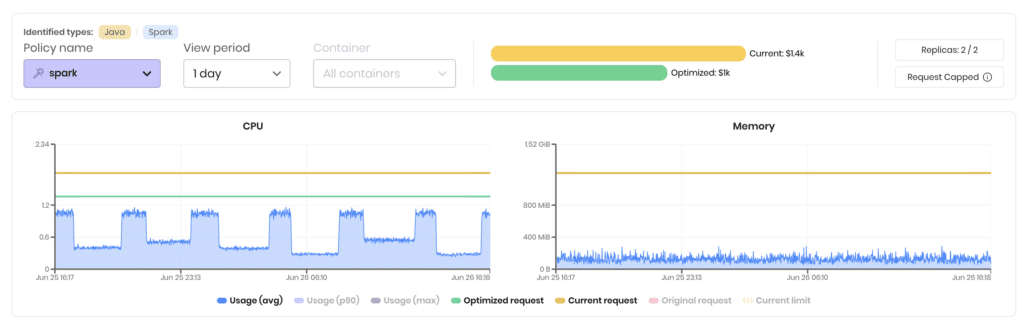

ScaleOps’s built-in support for Spark workload types

6. Sidecar Support

VPA: VPA may struggle with sidecar containers, as it treats them the same as primary containers, potentially leading to race conditions and conflicts during scaling.

ScaleOps: ScaleOps fully supports sidecar containers without any race condition issues or conflicts. This ensures that both primary and sidecar containers are managed effectively, maintaining application stability.

7. Active Bin Packing

VPA: VPA does not focus on active bin-packing of pods with PDB constraints or annotations preventing optimization, which can lead to inefficient resource utilization and higher costs.

ScaleOps: ScaleOps actively bin-packs these unevictable pods, improving resource utilization and cost savings. This proactive approach helps in maintaining an optimal balance of resources across the cluster.

8. Policy-Based Management

VPA: VPA offers basic configuration options but lacks advanced policy-based management for specific workload requirements, limiting customization.

ScaleOps: ScaleOps allows the creation of custom policies for specific workloads, accommodating diverse requirements such as percentiles, history windows, and other advanced parameters. This flexibility ensures that unique workload needs are met efficiently.

Conclusion

While Kubernetes VPA provides a robust solution for vertical pod autoscaling, ScaleOps offers a more comprehensive and dynamic approach to automatic pod rightsizing. With features like zero downtime, seamless HPA integration, auto healing, fast response times, broad workload support, sidecar support, active bin-packing, and policy-based management, ScaleOps stands out as the superior choice.

Ready to optimize your Kubernetes workloads effortlessly? Try ScaleOps today and experience the benefits firsthand.