The Kubernetes Cluster Autoscaler is a powerful tool designed to manage the scaling of nodes in a cluster, ensuring that workloads have the resources they need while optimizing costs. However, certain pod configurations can hinder the autoscaler’s ability to bin pack efficiently, leading to resource waste. Let’s delve into the specific challenges posed by Pods with Pod Disruption Budgets (PDBs) that prevent eviction, Pods marked as safe-to-evict “false”, and those with numerous anti-affinity constraints.

Pods with PDBs that Do Not Allow Eviction

Pod Disruption Budgets (PDBs) are a critical mechanism in Kubernetes for ensuring the availability of key services during voluntary disruptions, such as maintenance or scaling events. PDBs specify the minimum number of replicas that must remain available during such disruptions. While they are essential for maintaining service stability, they can pose a challenge for the Cluster Autoscaler when they do not allow the eviction of any pods.

When no pods can be evicted, the Cluster Autoscaler is restricted in its ability to consolidate workloads onto fewer nodes. This leads to scenarios where nodes remain underutilized because the autoscaler cannot move pods to free up entire nodes for scaling down. Consequently, resource waste increases as the cluster retains more nodes than necessary to handle the current workload.

apiVersion: policy/v1

kind: PodDisruptionBudget

metadata:

name: example-pdb

spec:

minAvailable: 100%

selector:

matchLabels:

app: example-app

An example of a PDB that ensures that 100% of pods should be available

Pods with restrictive annotations

The annotations cluster-autoscaler.kubernetes.io/safe-to-evict and karpenter.sh/do-not-disrupt are used to indicate that a pod should not be evicted. These annotations are typically used for critical system pods or stateful applications that require stability and consistent storage.

While these annotations are crucial for certain workloads, they can significantly hinder the autoscaler’s efficiency. Similar to the issue with PDBs, when too many pods are marked as non-evictable, the autoscaler has fewer opportunities to optimize resource usage. This can lead to fragmented node utilization, where nodes are not fully utilized but cannot be scaled down due to the presence of non-evictable pods, resulting in increased operational costs.

apiVersion: apps/v1

kind: Deployment

metadata:

name: example-deployment

labels:

app: example-app

spec:

replicas: 3

selector:

matchLabels:

app: example-app

template:

metadata:

labels:

app: example-app

annotations:

cluster-autoscaler.kubernetes.io/safe-to-evict: "false"

spec:

containers:

- name: example-container

image: nginx:latest

ports:

- containerPort: 80

An example of a pod that will not be evicted due to the safe-to-evict annotation

Pods with Many Anti-Affinity Constraints

Pod anti-affinity rules are used to specify that certain pods should not be scheduled on the same nodes, ensuring that workloads are spread out for reliability and fault tolerance. However, extensive use of anti-affinity constraints can complicate the scheduling process and hinder efficient bin packing.

When numerous pods have anti-affinity rules, the Kubernetes scheduler faces a complex puzzle. The scheduler must place pods in a manner that respects these rules while also trying to fill nodes to their capacity. This often results in nodes that are not optimally packed, as the scheduler must leave space to accommodate the anti-affinity constraints. Consequently, the Cluster Autoscaler finds it challenging to free up entire nodes for scaling down, leading to increased resource waste.

Potential Solutions to Improve Bin Packing Efficiency

Addressing the challenges posed by these pod configurations requires a multi-faceted approach. Here are some potential solutions:

- Review and Adjust PDBs: Regularly review PDB configurations to ensure they are set appropriately. Where possible, adjust the minimum available replicas to allow some flexibility for the autoscaler to evict and reschedule pods.

- Use

safe-to-evictSparingly: Apply thesafe-to-evictannotation judiciously. Only critical pods that truly cannot be interrupted should have this annotation. Evaluate whether some stateful or critical pods can tolerate eviction during scaling events. - Optimize Anti-Affinity Rules: Reevaluate the necessity and scope of anti-affinity rules. Where possible, reduce the complexity of these rules to provide more scheduling flexibility. Consider using soft anti-affinity rules (preferredDuringSchedulingIgnoredDuringExecution) instead of hard rules (requiredDuringSchedulingIgnoredDuringExecution) to give the scheduler more leeway.

- Leverage Node Affinity and Taints/Tolerations: Complement anti-affinity constraints with node affinity and taints/tolerations to guide pod placement more effectively. This can help balance the load across nodes while respecting workload requirements.

- Monitor and Analyze Resource Usage: Continuously monitor resource usage and pod placement to identify inefficiencies. Use tools and dashboards to visualize node utilization and adjust configurations as needed to optimize bin packing.

By addressing these challenges and implementing best practices, Kubernetes clusters can achieve more efficient resource utilization, reducing waste and optimizing costs.

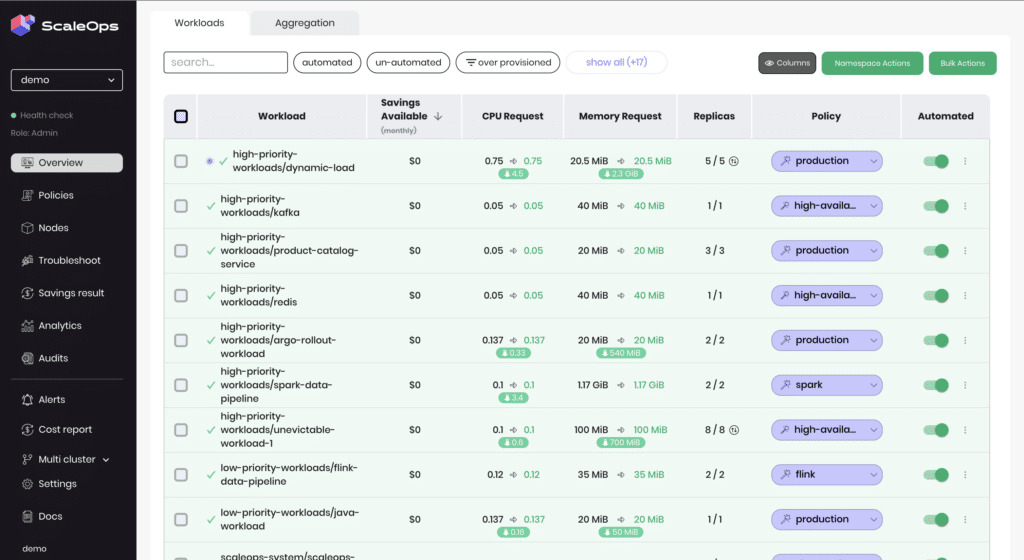

ScaleOps smart pod optimization including any workload type including out-of-the-box support for un-evictable pods

Conclusion

Efficient pod scheduling and node utilization are crucial for maintaining a cost-effective and high-performing Kubernetes environment. While PDBs, non-evictable pods, and anti-affinity constraints serve important purposes, they can complicate the bin packing process for the Cluster Autoscaler. However, with ScaleOps, these challenges are addressed automatically and seamlessly. ScaleOps dynamically optimizes pod placement and ensures that your cluster resources are used efficiently without manual intervention.

Ready to optimize your Kubernetes cluster for maximum efficiency? Try ScaleOps today and experience streamlined management and scaling. Visit ScaleOps to get started!