When managing large-scale Kubernetes clusters, efficient resource utilization is key to maintaining application performance while controlling costs. But certain workloads, deemed “unevictable,” can hinder this balance. These pods—restricted by Pod Disruption Budgets (PDBs), safe-to-evict annotations, or their role in core Kubernetes operations—are anchored to nodes, preventing the autoscaler from adjusting resources effectively. The result? Underutilized nodes that drive up costs and compromise scalability. In this blog post, we dive into how unevictable workloads challenge Kubernetes autoscaling and how ScaleOps’ optimized pod placement capabilities bring new efficiency to clusters through intelligent automation.

Blockers for Efficient Autoscaling with ScaleOps

Kubernetes clusters thrive on scaling resources dynamically to meet application demand. However, this balance can be disrupted by unevictable workloads: pods that the autoscaler cannot move or remove because of Pod Disruption Budgets (PDBs), safe-to-evict annotations, or their status as critical kube-system pods. A single such pod can keep an otherwise underutilized node from being scaled down, so resources sit idle and costs rise.

What are unevictable workloads?

Pods become unevictable because of several Kubernetes settings that protect availability and stability at the cost of flexibility:

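- Pod Disruption Budgets (PDBs): A PDB protects an application's availability during voluntary disruptions, such as node drains. It does this by setting either the minimum number of replicas that must stay available (`minAvailable`) or the maximum number that may be unavailable (`maxUnavailable`). PDBs are essential for uptime. However, when a PDB allows no further disruptions, eviction requests for its pods are refused, which keeps those pods, and the nodes they run on, in place during scale-down.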

- Safe-to-Evict Annotations: Certain pods, such as those running critical system services, may carry the `cluster-autoscaler.kubernetes.io/safe-to-evict: "false"` annotation. It tells the Cluster Autoscaler never to evict the pod, so the node hosting it cannot be scaled down. This limits the autoscaler’s ability to move workloads efficiently.

- Naked Pods: Naked pods are pods created directly rather than managed by a higher-level controller such as a Deployment, ReplicaSet, or StatefulSet. Nothing would recreate a naked pod after eviction, so by default the Cluster Autoscaler does not evict it. As a result, the node running it cannot be scaled down, and its workloads cannot be bin packed onto other nodes.
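For PDBs, whether an eviction is blocked comes down to a single number, the budget's `status.disruptionsAllowed`. When it is 0, the Eviction API refuses the request and the autoscaler cannot drain the node. The sketch below approximates how the disruption controller derives that number. The helper names are hypothetical, percentages are assumed to round up, and details such as how unhealthy pods are treated are ignored.

```python
from typing import Optional, Union

# A PDB field is either an absolute count (2) or a percentage string ("50%").
IntOrPercent = Union[int, str]


def scaled_value(value: IntOrPercent, total: int) -> int:
    """Resolve an int-or-percent field against `total`, rounding percentages up."""
    if isinstance(value, str) and value.endswith("%"):
        percent = int(value[:-1])
        return (total * percent + 99) // 100  # integer ceiling of total * percent / 100
    return int(value)


def disruptions_allowed(expected: int, healthy: int,
                        min_available: Optional[IntOrPercent] = None,
                        max_unavailable: Optional[IntOrPercent] = None) -> int:
    """Approximate a PDB's status.disruptionsAllowed (hypothetical helper)."""
    if (min_available is None) == (max_unavailable is None):
        raise ValueError("set exactly one of min_available or max_unavailable")
    if expected <= 0:
        return 0
    if min_available is not None:
        desired_healthy = scaled_value(min_available, expected)
    else:
        desired_healthy = max(0, expected - scaled_value(max_unavailable, expected))
    # Never negative: a budget that is already violated allows no further disruptions.
    return max(0, healthy - desired_healthy)


if __name__ == "__main__":
    # A zero-disruption budget: three healthy replicas, none may go down.
    print(disruptions_allowed(expected=3, healthy=3, max_unavailable=0))  # 0
    # A looser budget lets the autoscaler evict one replica at a time.
    print(disruptions_allowed(expected=3, healthy=3, min_available=2))    # 1
```

A value of 1 lets a drain proceed one pod at a time. A value of 0 pins every node that hosts one of the budget's pods.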

Together, these factors make node scaling and bin packing more difficult, leaving nodes with underutilized resources that the cluster autoscaler cannot reclaim.
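These rules can be condensed into a single per-pod check. The sketch below is a simplified model of the Cluster Autoscaler's documented scale-down rules under default flags (notably `--skip-nodes-with-system-pods=true`). Pods are plain dicts shaped like Kubernetes JSON. `scale_down_blockers` and its precomputed `pdb_disruptions_allowed` argument are hypothetical. Local-storage checks and other autoscalers such as Karpenter are not modeled.

```python
from typing import Any, Dict, List, Optional

# Annotation key the Cluster Autoscaler reads on pods.
SAFE_TO_EVICT = "cluster-autoscaler.kubernetes.io/safe-to-evict"


def scale_down_blockers(pod: Dict[str, Any],
                        pdb_disruptions_allowed: Optional[int] = None) -> List[str]:
    """Return why this pod would pin its node (an empty list means it would not).

    `pdb_disruptions_allowed` is the disruptionsAllowed of the PDB selecting the
    pod, or None if no PDB selects it (hypothetical input; the real autoscaler
    looks PDBs up itself).
    """
    meta = pod.get("metadata", {})
    annotations = meta.get("annotations") or {}
    owners = meta.get("ownerReferences") or []
    controller = next((o for o in owners if o.get("controller")), None)
    reasons: List[str] = []

    # DaemonSet pods run on every node anyway, so they are not counted as blockers.
    if controller is not None and controller.get("kind") == "DaemonSet":
        return reasons

    safe_to_evict = annotations.get(SAFE_TO_EVICT)
    # An explicit "true" skips these checks, but not the PDB check below.
    if safe_to_evict != "true":
        if controller is None:
            reasons.append("naked pod: no controller would recreate it")
        # Assumes the default --skip-nodes-with-system-pods=true.
        if meta.get("namespace") == "kube-system" and pdb_disruptions_allowed is None:
            reasons.append("kube-system pod without a PDB")
        if safe_to_evict == "false":
            reasons.append("annotated safe-to-evict=false")

    if pdb_disruptions_allowed == 0:
        reasons.append("PDB allows no disruptions")
    return reasons


if __name__ == "__main__":
    naked = {"metadata": {"name": "debug-shell", "namespace": "default"}}
    guarded = {"metadata": {
        "name": "api-0", "namespace": "default",
        "ownerReferences": [{"kind": "ReplicaSet", "name": "api", "controller": True}],
    }}
    print(scale_down_blockers(naked))  # ['naked pod: no controller would recreate it']
    print(scale_down_blockers(guarded, pdb_disruptions_allowed=0))  # ['PDB allows no disruptions']
```

A node becomes a scale-down candidate only if every pod on it returns an empty list, and utilization and capacity checks still apply after that. This is why a single naked or PDB-locked pod is enough to keep an otherwise idle node running.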

Enter Pod Placement

ScaleOps’ Pod Placement capabilities offer a way to manage unevictable workloads without compromising the stability of the Kubernetes cluster. Here’s how:

- Automated Pod Placement Optimization: ScaleOps uses scheduling algorithms that analyze PDB configurations, pod ownership, and safe-to-evict annotations. From this analysis it identifies better placements for pods that do not violate these constraints, so pods are placed efficiently in exactly the situations where traditional autoscaling struggles.

- Advanced Bin Packing for Unevictable Pods: ScaleOps Pod Placement capabilities helps optimize pod distribution across nodes, enhancing and optimizing the ability of any cluster autoscaler (including Karpenter) to efficiently bi-pack the cluster.

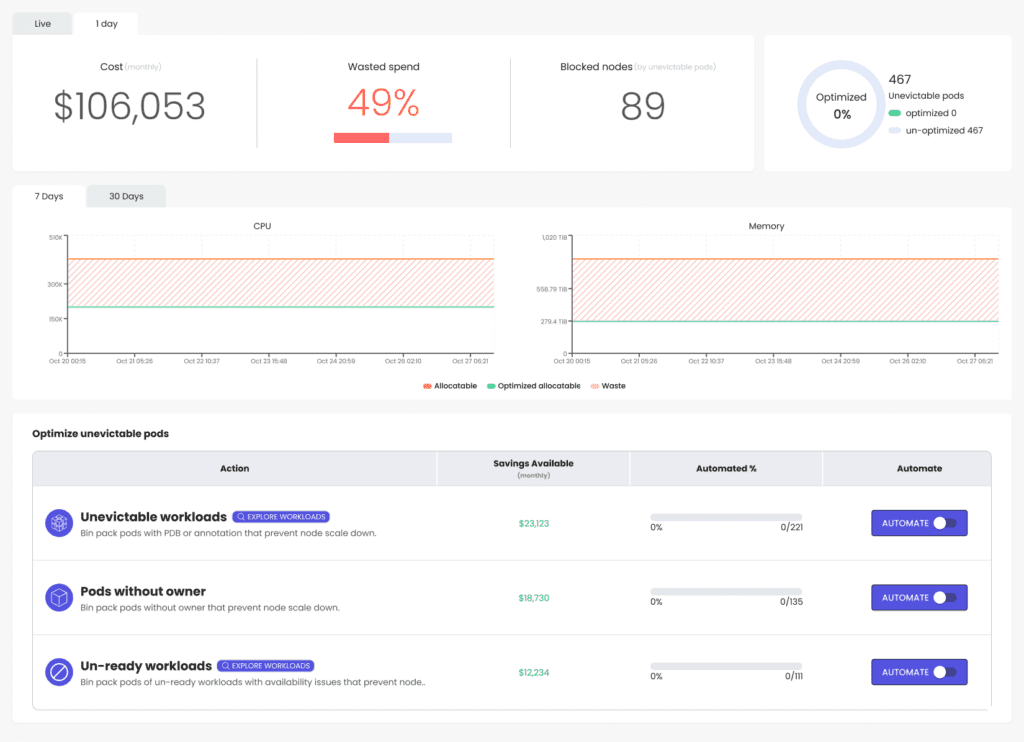

Visibility and Automation: Through intuitive dashboards, ScaleOps provides visibility into which workloads are unevictable and offers actionable insights to improve scheduling and scaling efficiency.
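Why placement matters for unevictable pods can be illustrated with a toy bin-packing model. This is a generic first-fit-decreasing sketch, not ScaleOps’ algorithm. It assumes identically sized nodes, models only CPU requests, and ignores memory, affinity, and topology spread constraints, which a real scheduler must honor. Pod names and sizes are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Pod:
    name: str
    cpu_millis: int    # CPU request in millicores
    unevictable: bool  # True if a PDB, annotation, or missing controller pins it


Node = List[Pod]  # a node is modeled as the pods placed on it


def used(node: Node) -> int:
    return sum(p.cpu_millis for p in node)


def first_fit_decreasing(pods: List[Pod], capacity: int,
                         nodes: Optional[List[Node]] = None) -> List[Node]:
    """Place pods largest-first onto the first node with room, opening nodes as needed."""
    placed = [list(n) for n in nodes] if nodes else []
    for pod in sorted(pods, key=lambda p: p.cpu_millis, reverse=True):
        if pod.cpu_millis > capacity:
            raise ValueError(f"{pod.name} does not fit on any node")
        for node in placed:
            if used(node) + pod.cpu_millis <= capacity:
                node.append(pod)
                break
        else:
            placed.append([pod])
    return placed


def place(pods: List[Pod], capacity: int) -> List[Node]:
    """Pack unevictable pods first so they share as few nodes as possible."""
    nodes = first_fit_decreasing([p for p in pods if p.unevictable], capacity)
    return first_fit_decreasing([p for p in pods if not p.unevictable], capacity, nodes)


def pinned_nodes(nodes: List[Node]) -> int:
    """Count nodes hosting at least one unevictable pod; they cannot scale down."""
    return sum(any(p.unevictable for p in node) for node in nodes)


if __name__ == "__main__":
    CAPACITY = 1000  # millicores per node
    scattered = [
        [Pod("debug-shell", 250, True), Pod("web-0", 250, False)],
        [Pod("metrics-agent", 250, True), Pod("web-1", 250, False)],
        [Pod("legacy-cron", 250, True), Pod("web-2", 250, False)],
        [Pod("batch-runner", 250, True), Pod("web-3", 250, False)],
    ]
    packed = place([p for node in scattered for p in node], CAPACITY)
    print(len(scattered), pinned_nodes(scattered))  # 4 4
    print(len(packed), pinned_nodes(packed))        # 2 1
```

When the four unevictable pods are scattered one per node, they pin all four half-empty nodes. When they are packed together at placement time, the same workload fits on two nodes, and only one of those is pinned.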

Case Study

Consider a Kubernetes cluster running a mission-critical application protected by a strict PDB that allows zero pod disruptions (for example, `maxUnavailable: 0`). Despite low resource utilization, the autoscaler cannot scale down the nodes hosting these pods, leaving them underused and driving up operational costs. After ScaleOps was implemented, the platform identified these PDB-constrained workloads as the ones blocking the underutilized nodes from scaling down. Once the workloads were automated, ScaleOps adjusted and optimized their pod placement. Automating this specific unevictable workload alone reduced the number of active nodes by 20%, significantly improving resource efficiency while maintaining service availability.

Conclusion

Unevictable workloads, such as pods with PDBs or safe-to-evict restrictions, can hamper the autoscaler’s ability to optimize Kubernetes resources, leading to wasted compute and higher costs. With ScaleOps, DevOps teams can maintain high availability while improving the efficiency of their clusters. ScaleOps dynamically adjusts pod placement and optimizes resource utilization, keeping Kubernetes clusters running smoothly even with restrictive workloads. Unevictable workloads no longer need to be a roadblock: ScaleOps transforms how you manage Kubernetes resources, boosting both performance and cost efficiency. Ready to optimize your cluster? Try ScaleOps today to experience seamless autoscaling and intelligent resource management.