What is Kubernetes Cost Optimization?

Kubernetes cost optimization is the practice of reducing unnecessary cloud spend by efficiently managing compute, storage, and network resources across Kubernetes clusters. This often includes real-time monitoring of resource usage, workload-aware scaling, and intelligent scheduling. As environments scale, even minor inefficiencies can cascade into substantial financial waste, making optimization not just a financial concern but an operational imperative.

Why is Kubernetes Cost Optimization Important?

Kubernetes delivers powerful orchestration capabilities for running distributed applications, but its abstraction layers can obscure the cost implications of infrastructure decisions. Without active cost governance, organizations risk underutilized nodes, over-provisioned workloads, and a lack of insight into resource consumption. These inefficiencies translate directly into inflated cloud bills and degraded performance.

Potential Risks of Unchecked Kubernetes Clusters

Unchecked Kubernetes clusters can lead to unnecessary expenses and performance issues:

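- Financial Waste: Paying for idle or underutilized nodes and over-provisioned workloads inflates cloud bills without delivering any value.

- Performance Bottlenecks: Under-provisioned or poorly scheduled workloads compete for CPU and memory, degrading service quality and operational performance.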

Key Benefits of Kubernetes Cost Optimization

Kubernetes cost optimization enables businesses to achieve greater efficiency and performance:

- Cost Efficiency: Lower cloud bills from eliminating unnecessary resource usage and overhead.

- Optimal Performance: Applications run smoothly and consistently because workloads receive the resources they actually need.

Goals of Cost Optimization Beyond Reducing Expenses

The goal of Kubernetes cost optimization isn’t just cutting costs; it is also about using resources effectively and aligning infrastructure with business needs:

- Maximizing Resource Utilization: Ensuring provisioned resources are put to productive use rather than sitting idle.

- Infrastructure Alignment: Aligning infrastructure with business needs, ensuring resources scale effectively with demand.

Kubernetes Cost Optimization Best Practices

Kubernetes cost optimization is essential for maintaining performance while minimizing unnecessary expenses. By implementing best practices, organizations can ensure that resources are used efficiently while maintaining optimal application performance. Below are key strategies to help manage costs effectively in a Kubernetes environment:

Right-Size Your Kubernetes Cluster

A common mistake is to over-provision node resources when initially setting up a cluster. Regularly reviewing and adjusting the number of nodes based on actual demand is essential for cost savings.

Kubernetes provides tools like the Cluster Autoscaler, which adjusts the number of nodes automatically: it adds nodes when pods cannot be scheduled because no node has enough free capacity for their resource requests, and removes nodes that stay underutilized when their pods can be moved to other nodes.
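The scale-up and scale-down decisions can be pictured with a small Python model. This is a simplified sketch, not the Cluster Autoscaler's implementation: `Node`, `needs_scale_up`, and `scale_down_candidates` are hypothetical names, utilization is computed from resource requests rather than live usage, and the 0.5 threshold mirrors the Cluster Autoscaler's default `--scale-down-utilization-threshold`. The real autoscaler also simulates whether a node's pods can be rescheduled before removing it, which this sketch omits.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    allocatable_cpu: float      # cores the node offers to pods
    allocatable_mem: float      # GiB the node offers to pods
    requested_cpu: float = 0.0  # sum of CPU requests of pods on the node
    requested_mem: float = 0.0  # sum of memory requests of pods on the node

    def fits(self, cpu: float, mem: float) -> bool:
        """True if a pod with these requests fits in the remaining capacity."""
        return (self.requested_cpu + cpu <= self.allocatable_cpu
                and self.requested_mem + mem <= self.allocatable_mem)

    def utilization(self) -> float:
        """Request-based utilization: the higher of the CPU and memory ratios."""
        return max(self.requested_cpu / self.allocatable_cpu,
                   self.requested_mem / self.allocatable_mem)


def needs_scale_up(nodes: list[Node], pod_cpu: float, pod_mem: float) -> bool:
    """A pod that fits on no existing node stays Pending, which prompts a scale-up."""
    return not any(node.fits(pod_cpu, pod_mem) for node in nodes)


def scale_down_candidates(nodes: list[Node], threshold: float = 0.5) -> list[str]:
    """Names of nodes whose CPU and memory request ratios are both below the threshold."""
    return [node.name for node in nodes if node.utilization() < threshold]


nodes = [Node("node-a", 4, 16, 3.5, 8), Node("node-b", 4, 16, 1, 2)]
print(needs_scale_up(nodes, 1, 1))   # False: node-b still has room
print(needs_scale_up(nodes, 4, 1))   # True: no node has 4 free cores
print(scale_down_candidates(nodes))  # ['node-b']
```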

Cost Monitoring

Monitoring your Kubernetes environment is the first and most critical step in cost optimization. Without proper monitoring, it’s nearly impossible to know how resources are being consumed or where costs are accumulating.
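A simple starting point for cost visibility is to attribute request-based cost to namespaces by pricing each pod's CPU and memory requests. The sketch below is a simplification with hypothetical unit prices (`CPU_PRICE_PER_CORE_HOUR`, `MEM_PRICE_PER_GIB_HOUR`) and made-up namespaces; dedicated cost tools also account for idle node capacity, discounts, storage, and network.

```python
from collections import defaultdict

# Hypothetical unit prices in USD; replace with your provider's actual rates.
CPU_PRICE_PER_CORE_HOUR = 0.03
MEM_PRICE_PER_GIB_HOUR = 0.004


def namespace_costs(pods: list[dict], hours: float = 1.0) -> dict[str, float]:
    """Attribute cost to namespaces from each pod's CPU (cores) and memory (GiB) requests."""
    totals: dict[str, float] = defaultdict(float)
    for pod in pods:
        hourly = (pod["cpu"] * CPU_PRICE_PER_CORE_HOUR
                  + pod["mem"] * MEM_PRICE_PER_GIB_HOUR)
        totals[pod["namespace"]] += hourly * hours
    return dict(totals)


pods = [
    {"namespace": "checkout", "cpu": 2.0, "mem": 4.0},
    {"namespace": "checkout", "cpu": 1.0, "mem": 2.0},
    {"namespace": "reporting", "cpu": 0.5, "mem": 1.0},
]
# Monthly estimate (about 730 hours), most expensive namespace first.
for ns, cost in sorted(namespace_costs(pods, hours=730).items(), key=lambda kv: -kv[1]):
    print(f"{ns}: ${cost:.2f}")
```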

Resource Limits

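Kubernetes allows you to set resource requests and limits on CPU and memory for individual containers. Requests determine how much capacity the scheduler reserves for a pod, while limits cap how much it can consume, preventing a single pod from using more resources than it should and thereby limiting waste.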
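Requests and limits are written as Kubernetes quantity strings such as `500m` (half a CPU core) or `256Mi` (256 mebibytes). The sketch below parses the common CPU and memory suffixes and flags a container `resources` block that has a missing limit or a request above its limit. `parse_cpu`, `parse_memory`, and `resource_issues` are hypothetical helpers, and the parser deliberately ignores rarer quantity forms such as exponents.

```python
BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}
DECIMAL_SUFFIXES = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def parse_cpu(quantity: str) -> float:
    """Convert a CPU quantity to cores: '500m' -> 0.5, '2' -> 2.0."""
    if quantity.endswith("m"):
        return int(quantity[:-1]) / 1000
    return float(quantity)


def parse_memory(quantity: str) -> int:
    """Convert a memory quantity to bytes: '256Mi' -> 268435456."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(float(quantity[:-2]) * factor)
    for suffix, factor in DECIMAL_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(float(quantity[:-1]) * factor)
    return int(quantity)  # plain bytes


def resource_issues(resources: dict) -> list[str]:
    """List problems in a container's `resources` block (requests and limits)."""
    requests = resources.get("requests", {})
    limits = resources.get("limits", {})
    issues = []
    for name, parse in (("cpu", parse_cpu), ("memory", parse_memory)):
        if name not in limits:
            issues.append(f"missing {name} limit")
        elif name in requests and parse(requests[name]) > parse(limits[name]):
            issues.append(f"{name} request exceeds limit")
    return issues


container_resources = {
    "requests": {"cpu": "250m", "memory": "128Mi"},
    "limits": {"cpu": "500m", "memory": "256Mi"},
}
print(resource_issues(container_resources))  # []
```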

Intelligent Automation with Context-Aware Optimization

Not all Kubernetes workloads are the same. Batch jobs, stateless APIs, and real-time streaming services each exhibit different usage patterns and performance requirements. Applying the same scaling or resource provisioning logic to every workload can lead to inefficiencies or performance degradation. Context-aware optimization addresses this by tailoring resource management to the unique characteristics of each workload.

Modern platforms use historical and real-time metrics to dynamically adjust pod resources, apply policy-based scaling tailored to specific workload types, and make non-disruptive changes during runtime. In production environments, where uptime and performance are critical, context-aware automation ensures that workloads are neither over-provisioned nor under-provisioned, delivering cost savings without sacrificing reliability.
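One way to picture context-aware rightsizing is a per-workload-type policy applied to historical usage samples. In the sketch below, the workload types, percentiles, and headroom factors in `POLICIES` are hypothetical values chosen for illustration, not recommendations from any particular platform, and the percentile uses the simple nearest-rank method.

```python
import math

# Hypothetical policies: (usage percentile to target, headroom multiplier).
POLICIES = {
    "batch": (0.50, 1.10),      # throughput-oriented, tolerates brief slowdowns
    "api": (0.95, 1.20),        # latency-sensitive, sized for most peaks
    "streaming": (0.99, 1.30),  # continuous, sized close to the observed maximum
}


def percentile(samples: list[float], q: float) -> float:
    """Nearest-rank percentile (0 < q <= 1) of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(q * len(ordered)))
    return ordered[rank - 1]


def recommend_request(samples: list[float], workload_type: str) -> float:
    """Recommended request: the policy's percentile of observed usage plus headroom."""
    q, headroom = POLICIES[workload_type]
    return percentile(samples, q) * headroom


cpu_millicores = [120, 135, 150, 160, 180, 210, 240, 300, 420, 610]
for kind in POLICIES:
    print(kind, round(recommend_request(cpu_millicores, kind)))
```

The same usage history yields a smaller request for a batch job than for a latency-sensitive API, which is the point of tailoring the policy to the workload.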

Autoscaling

Autoscaling adjusts resources based on demand, and Kubernetes supports both horizontal and vertical autoscaling. The Horizontal Pod Autoscaler (HPA) automatically scales the number of pod replicas based on metrics such as CPU or memory usage. The Vertical Pod Autoscaler (VPA), installed as a separate add-on, adjusts the CPU and memory requests and limits for containers based on their usage history. VPA is particularly useful for workloads whose usage patterns fluctuate over time.
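The Kubernetes documentation describes the HPA's core calculation as `desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue)`, with no scaling when the ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below reproduces that formula and clamps the result to hypothetical `min_replicas` and `max_replicas` bounds; it leaves out the real controller's handling of missing metrics, not-yet-ready pods, and scale-down stabilization.

```python
import math


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, min_replicas: int = 1,
                         max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count from the HPA scaling formula, clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: no scaling
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 50% target: ceil(4 * 1.8) = 8.
print(hpa_desired_replicas(4, current_metric=90, target_metric=50))
```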

Discounted Resources

Cloud providers offer various discounted pricing options, such as reserved instances and spot instances. Reserved instances provide discounts in exchange for long-term commitments (usually one or three years), while spot instances are available at a fraction of the cost but may be interrupted. Both options can save significant amounts if used wisely.

For predictable workloads, using reserved instances is a cost-effective strategy, while spot instances are best suited for non-critical, fault-tolerant workloads.
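The effect of mixing purchase options can be estimated with simple arithmetic. In the sketch below, the on-demand price and the discount fractions in `DISCOUNTS` are hypothetical placeholders; actual discounts vary by provider, region, instance type, and commitment term, and spot prices change over time.

```python
# Hypothetical discounts relative to the on-demand price.
DISCOUNTS = {"on_demand": 0.0, "reserved": 0.40, "spot": 0.70}


def blended_hourly_cost(on_demand_price: float, node_counts: dict[str, int]) -> float:
    """Hourly cost of a node pool split across purchase options."""
    return sum(count * on_demand_price * (1 - DISCOUNTS[option])
               for option, count in node_counts.items())


all_on_demand = blended_hourly_cost(0.20, {"on_demand": 10})
mixed = blended_hourly_cost(0.20, {"reserved": 6, "spot": 3, "on_demand": 1})
print(f"all on-demand: ${all_on_demand:.2f}/h, mixed: ${mixed:.2f}/h, "
      f"savings: {1 - mixed / all_on_demand:.0%}")
```

The mixed pool follows the guidance above: reserved capacity covers the predictable baseline, spot capacity covers fault-tolerant work, and a small on-demand share remains for everything else.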

Use Production-Grade Automation Tools

As Kubernetes environments grow in scale and complexity, manual rightsizing, scaling, and cleanup become unsustainable. In production settings, where resource demands shift rapidly and workloads continuously evolve, automation is essential. Production-grade optimization tools go beyond basic metrics and apply real-time intelligence to manage infrastructure proactively. These platforms automatically adjust pod CPU and memory requests, consolidate underutilized nodes through advanced bin-packing algorithms, enforce scaling policies based on actual workload behavior, and detect and remediate idle or orphaned resources.
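Bin-packing is what makes node consolidation possible: pods are repacked onto as few nodes as their requests allow. The sketch below uses first-fit decreasing, a classic heuristic, on a single dimension (CPU requests in millicores) with a hypothetical node capacity. Production schedulers and optimization tools also weigh memory, affinity rules, disruption budgets, and more, so treat this only as an illustration of the idea.

```python
def pack_pods(requests_millicores: list[int], node_capacity: int) -> list[list[int]]:
    """First-fit decreasing: place each pod (largest first) on the first node with room."""
    nodes: list[list[int]] = []
    for request in sorted(requests_millicores, reverse=True):
        if request > node_capacity:
            raise ValueError(f"pod request {request}m exceeds node capacity {node_capacity}m")
        for node in nodes:
            if sum(node) + request <= node_capacity:
                node.append(request)
                break
        else:  # no existing node had room, so open a new one
            nodes.append([request])
    return nodes


# Seven pods that might otherwise be spread across, say, four lightly used 4-core nodes.
pods = [500, 2000, 1000, 500, 2000, 1000, 1000]
packed = pack_pods(pods, node_capacity=4000)
print(len(packed), packed)
```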

Sleep Mode

In non-production environments, such as development or testing, workloads may only be needed during specific periods. You can implement sleep mode for these workloads, shutting them down during off-hours to save on costs. Cloud providers often offer automation options for this, or you can use Kubernetes Jobs or CronJobs to schedule the shutdown of unnecessary resources, for example by scaling Deployments down to zero replicas outside working hours.
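A scheduled sleep job mostly needs two things: a rule for when an environment should be awake, and the replica count to apply. The sketch below encodes a hypothetical weekday 08:00 to 20:00 schedule and estimates the share of weekly hours saved. In practice a CronJob would apply the result, for example with `kubectl scale deployment <name> --replicas=0`.

```python
from datetime import datetime

# Hypothetical schedule: awake Monday to Friday, 08:00 to 20:00 (cluster-local time).
WORK_DAYS = range(0, 5)    # datetime.weekday(): Monday=0 ... Sunday=6
WORK_HOURS = range(8, 20)  # hours 8 through 19


def should_be_awake(now: datetime) -> bool:
    return now.weekday() in WORK_DAYS and now.hour in WORK_HOURS


def desired_replicas(now: datetime, normal_replicas: int) -> int:
    """Replica count a sleep job would apply: the normal count when awake, zero otherwise."""
    return normal_replicas if should_be_awake(now) else 0


def weekly_savings_fraction() -> float:
    """Share of the 168 weekly hours during which the environment sleeps."""
    awake_hours = len(WORK_DAYS) * len(WORK_HOURS)
    return 1 - awake_hours / (7 * 24)


print(desired_replicas(datetime(2024, 1, 6, 10), normal_replicas=3))  # Saturday -> 0
print(f"{weekly_savings_fraction():.0%} of weekly hours asleep")
```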

Cleanup Strategies

Kubernetes environments can accumulate unused or orphaned resources, such as Persistent Volume Claims (PVCs) or dangling images, over time. Regularly cleaning up these resources is crucial to controlling costs.
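Finding PVCs that no pod mounts is a reasonable first pass at spotting orphaned storage. The sketch below works on objects shaped like the `items` returned by `kubectl get pvc -A -o json` and `kubectl get pods -A -o json`, using the pod field `spec.volumes[].persistentVolumeClaim.claimName`; the helper name and sample data are made up. An unmounted claim is not always garbage (a StatefulSet scaled to zero, for instance, keeps its claims), so review the list before deleting anything.

```python
def unmounted_pvcs(pvcs: list[dict], pods: list[dict]) -> list[tuple[str, str]]:
    """Return (namespace, name) for every PVC that no pod references as a volume."""
    in_use = set()
    for pod in pods:
        namespace = pod["metadata"]["namespace"]
        for volume in pod.get("spec", {}).get("volumes", []):
            claim = volume.get("persistentVolumeClaim")
            if claim:
                in_use.add((namespace, claim["claimName"]))
    return sorted(
        (pvc["metadata"]["namespace"], pvc["metadata"]["name"])
        for pvc in pvcs
        if (pvc["metadata"]["namespace"], pvc["metadata"]["name"]) not in in_use
    )


pvcs = [
    {"metadata": {"namespace": "dev", "name": "postgres-data"}},
    {"metadata": {"namespace": "dev", "name": "old-test-data"}},
]
pods = [{
    "metadata": {"namespace": "dev", "name": "postgres-0"},
    "spec": {"volumes": [{"name": "data",
                          "persistentVolumeClaim": {"claimName": "postgres-data"}}]},
}]
print(unmounted_pvcs(pvcs, pods))  # [('dev', 'old-test-data')]
```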

Cluster Sharing and Multi-Tenancy

In larger organizations, multiple teams may use the same Kubernetes cluster. Instead of creating separate clusters, consider enabling multi-tenancy within a single cluster. Kubernetes namespaces and role-based access control (RBAC) allow for isolated workloads while sharing the underlying infrastructure, helping reduce costs.

By isolating teams using namespaces and controlling access with RBAC policies, you can manage costs more effectively while maintaining security and autonomy for different teams.

Kubernetes Cost Optimization Challenges

Kubernetes environments introduce several cost optimization challenges due to their dynamic and complex infrastructure. Understanding these challenges is crucial for effectively managing resource usage and preventing unnecessary expenses:

Lack of Visibility

As Kubernetes clusters rapidly scale to hundreds or thousands of pods, visibility into resource usage and costs becomes fragmented. Native Kubernetes lacks built-in cost monitoring, often requiring additional tools like Prometheus, Grafana, or third-party platforms to achieve actionable insight.

Implication: Hard to track the exact resource consumption and cost, leading to blind spots and missed optimization opportunities.

Dynamic and Complex Infrastructure

The dynamic nature of Kubernetes, where pods are constantly created, rescheduled, and terminated, makes real-time resource tracking difficult and sometimes impossible.

Implication: Leads to frequent over- or under-provisioning, resulting in inefficient resource allocation and the possibility of performance and reliability issues.

Misaligned Incentives

Development teams are typically measured by feature velocity and time-to-market, while infrastructure or platform teams are responsible for efficiency and cost control. Without shared accountability or visibility, cost-conscious engineering decisions are often deprioritized.

Implication: Leads to unoptimized resource usage and friction, both between speed and sustainability and between application and platform teams.

Common Kubernetes Cost Optimization Pitfalls

When optimizing Kubernetes costs, organizations often encounter challenges that lead to inefficiencies. Avoiding these common pitfalls is key to achieving both cost savings and optimal performance.

- Over-Provisioning Resources: One of the most common pitfalls is over-provisioning resources, particularly CPU and memory. Over-provisioned clusters lead to higher compute costs and often leave resources idle, contributing to unnecessary expenses.

- Ignoring Storage Costs: Storage costs can easily spiral out of control if persistent volumes and backups are not carefully managed. It’s essential to continuously monitor the storage being used by your applications and to clean up unused volumes regularly.

- Neglecting Networking Costs: Networking costs, especially in cloud environments, can be overlooked. High levels of data transfer between services can lead to costly egress fees, especially when dealing with services outside the Kubernetes cluster.

What are Different Kubernetes Costs?

Understanding where costs are incurred in Kubernetes environments is the first step to cost optimization. Below are the main areas where expenses arise:

1. Compute Costs

The biggest cost in a Kubernetes cluster is usually compute: the virtual machines or servers running worker nodes. In cloud environments, these costs vary with instance type and size, such as EC2 instances in AWS. Without resource requests and limits, Kubernetes won’t constrain pod resource consumption, and poorly tuned settings often lead to over-allocation of CPU and memory, which increases compute costs.
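The cost of over-allocation can be estimated by comparing what workloads request with what they actually use. The sketch below uses a hypothetical per-core price and made-up workload figures; real usage data would come from a metrics pipeline such as metrics-server or Prometheus.

```python
# Hypothetical on-demand price per vCPU-hour in USD.
PRICE_PER_CORE_HOUR = 0.04


def idle_cpu_report(workloads: dict[str, tuple[float, float]], hours: float) -> dict[str, dict]:
    """For each workload (requested cores, average used cores), report efficiency and idle cost."""
    report = {}
    for name, (requested, used) in workloads.items():
        idle = max(0.0, requested - used)
        report[name] = {
            "efficiency": used / requested if requested else 0.0,
            "idle_cost": idle * PRICE_PER_CORE_HOUR * hours,
        }
    return report


workloads = {"web": (8.0, 2.0), "worker": (4.0, 3.5)}
for name, row in idle_cpu_report(workloads, hours=730).items():
    print(f"{name}: {row['efficiency']:.0%} efficient, ${row['idle_cost']:.2f}/month idle")
```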

2. Network Costs

Network costs arise from data transfers between services, clusters, and external networks. Kubernetes applications often involve microservices that communicate constantly and consume bandwidth. Cloud providers charge for network traffic, especially egress traffic, which can quickly escalate in high-traffic environments. Frequent interactions with external APIs or services also add to outbound traffic costs. Understanding where network traffic originates, and in what volume, is crucial to reducing network costs in a Kubernetes cluster.
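A rough network cost estimate multiplies traffic volume by the rate for each kind of transfer. The traffic categories and per-GB rates below are hypothetical placeholders, since pricing differs by provider, region, and destination; treating same-zone traffic as free is also an assumption of this sketch.

```python
# Hypothetical per-GB transfer rates in USD; check your provider's pricing page.
RATES_PER_GB = {
    "same_zone": 0.00,
    "cross_zone": 0.01,
    "internet_egress": 0.09,
}


def monthly_transfer_cost(traffic_gb: dict[str, float]) -> dict[str, float]:
    """Cost per traffic category for one month of observed transfer volumes (GB)."""
    return {kind: gb * RATES_PER_GB[kind] for kind, gb in traffic_gb.items()}


observed = {"same_zone": 50_000, "cross_zone": 12_000, "internet_egress": 3_000}
costs = monthly_transfer_cost(observed)
print(costs, f"total ${sum(costs.values()):.2f}")
```

Even with these made-up numbers, the smallest traffic category (internet egress) produces the largest bill, which is why egress deserves particular attention.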

3. Storage Costs

Storage costs refer to the costs associated with storing data in persistent volumes, object storage (like S3), or other storage solutions integrated with your Kubernetes clusters. In cloud environments, providers charge based on the size and type of storage provisioned, along with I/O operations. Orphaned persistent volumes (i.e., storage that’s no longer associated with active pods) can inflate costs if not properly managed.
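Persistent volumes whose claim has been deleted move to the `Released` phase and, under a `Retain` reclaim policy, keep incurring charges until someone removes them. The sketch below totals the capacity of such volumes from objects shaped like `kubectl get pv -o json` items and prices it at a hypothetical per-GiB monthly rate; the helpers are illustrative and only understand `Gi` and `Ti` capacities.

```python
# Hypothetical block-storage price in USD per GiB-month.
PRICE_PER_GIB_MONTH = 0.08
UNITS_IN_GIB = {"Gi": 1, "Ti": 1024}


def capacity_gib(quantity: str) -> float:
    """Convert a 'Gi' or 'Ti' capacity string such as '100Gi' to GiB."""
    return float(quantity[:-2]) * UNITS_IN_GIB[quantity[-2:]]


def released_volume_cost(pvs: list[dict]) -> tuple[list[str], float]:
    """Names of PVs in the Released phase and their combined monthly cost."""
    released = [pv for pv in pvs if pv.get("status", {}).get("phase") == "Released"]
    total_gib = sum(capacity_gib(pv["spec"]["capacity"]["storage"]) for pv in released)
    return [pv["metadata"]["name"] for pv in released], total_gib * PRICE_PER_GIB_MONTH


pvs = [
    {"metadata": {"name": "pv-a"}, "spec": {"capacity": {"storage": "100Gi"}},
     "status": {"phase": "Bound"}},
    {"metadata": {"name": "pv-b"}, "spec": {"capacity": {"storage": "500Gi"}},
     "status": {"phase": "Released"}},
    {"metadata": {"name": "pv-c"}, "spec": {"capacity": {"storage": "1Ti"}},
     "status": {"phase": "Released"}},
]
names, monthly_cost = released_volume_cost(pvs)
print(names, f"${monthly_cost:.2f}/month")
```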

Top 5 Kubernetes Cost Optimization Tools

With a wide variety of tools available for Kubernetes cost management, choosing the right one for your organization can be overwhelming. To simplify the process, we’ve compiled a list of the top five Kubernetes cost optimization tools based on their features, usability, and overall impact on automated resource management:

1. ScaleOps

ScaleOps is a fully automated Kubernetes resource optimization platform purpose-built for production environments. Unlike traditional monitoring tools or manual tuning workflows, ScaleOps continuously and intelligently adjusts resources in real time based on live workload behavior and infrastructure conditions. Its context-aware automation spans pods, nodes, and scaling policies, enabling Kubernetes environments to run lean, performant, and resilient without constant manual oversight.

Key Features:

- Automatic Pod Rightsizing: Dynamically adjusts pod requests and limits in real time, optimizing workloads to prevent over- or under-provisioning without downtime.

- Node Optimization: Uses advanced bin-packing to consolidate nodes, reducing the number of nodes and optimizing resource allocation for improved performance and cost savings.

- Smart Scaling Policies: Provides out-of-the-box and customizable scaling policies tailored to different workload types, with automatic policy detection for faster setup.

- Unified Cost Monitoring: Offers visibility into resource costs across clusters, namespaces, and labels, helping teams track and optimize resource allocation expenses.

- Role-based Access Control (RBAC): Limits access to the platform based on user roles, improving security and operational control.

- Full Automation, Built for Production: ScaleOps is built from the ground up to run in critical production environments. It supports strict SLAs, integrates into GitOps workflows, and respects Kubernetes-native controls like RBAC and namespace boundaries. The platform does not require invasive sidecars or data egress, making it suitable for regulated or restricted deployments.

These features focus on simplifying Kubernetes management and providing both automation and visibility into resource usage. ScaleOps also provides detailed cost reports that help teams understand where they’re overspending and how to remediate those issues. The tool excels at delivering quick, actionable recommendations that are easy to implement.

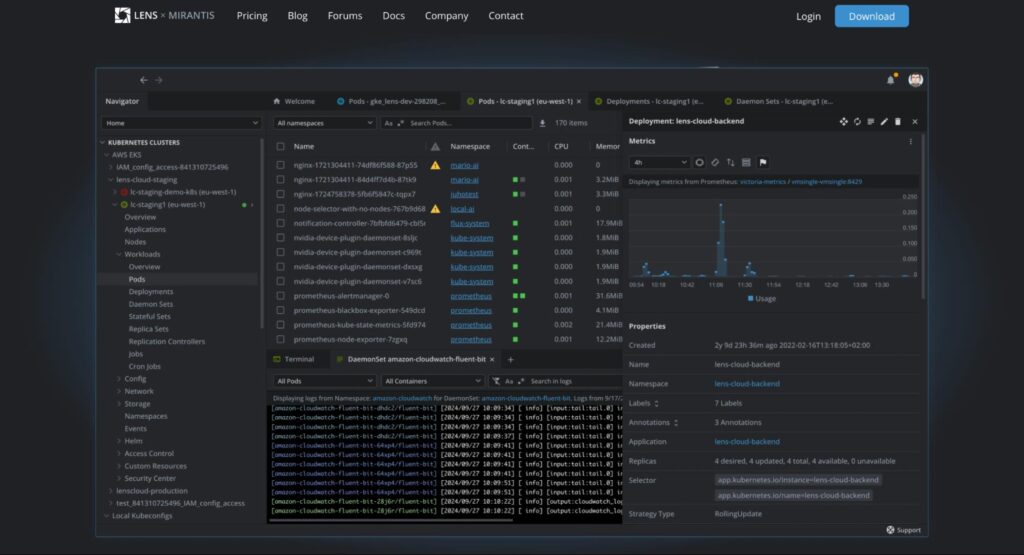

2. Lens

Lens is a popular open-source Kubernetes IDE that also provides powerful cost management and cluster visualization capabilities. While its primary function is to simplify Kubernetes cluster management, it also helps teams monitor and optimize resource usage, leading to better cost control.

Key Features:

- Unified Kubernetes Management: Provides a user-friendly graphical interface for managing Kubernetes clusters, helping users streamline cluster operations.

- Multi-Cluster Support: Easily manage multiple Kubernetes clusters in one view, switching between them without complicated setups.

- Real-Time Metrics & Monitoring: Offers real-time insights into cluster health and performance, displaying key metrics like CPU and memory usage for more effective monitoring.

- Built-In Terminal: A terminal integrated directly into the Lens interface for running kubectl commands, with the cluster context configured automatically.

- Resource Overview and Control: Allows users to inspect and manage resources (pods, nodes, services, etc.) within a cluster, offering detailed insights and control.

- Extensions: Supports plugins and extensions to add extra functionalities tailored to specific needs, making it adaptable for various use cases.

These features aim to make Kubernetes management easier and more accessible, especially for developers and administrators working across complex environments.

3. Karpenter

Karpenter is an open-source tool that simplifies the process of dynamically scaling Kubernetes clusters. It automatically adjusts the number and size of nodes based on the real-time requirements of your workloads. This results in more efficient resource allocation, preventing over-provisioning and idle nodes, which translates to significant cost savings.

Key Features:

- Dynamic Provisioning: Karpenter automatically provisions new compute resources (nodes) in real time to match the specific needs of Kubernetes workloads, improving resource efficiency.

- Cost Optimization: It optimizes the cost of cloud infrastructure by selecting the most cost-effective instance types based on workloads, minimizing over-provisioning.

- Flexible Scheduling: Karpenter allows for fine-grained scheduling and supports a wide range of instance types and sizes, including spot instances, for flexible and scalable cluster operations.

- Cluster Scalability: Karpenter enables seamless scaling of clusters, automatically adding or removing nodes based on workload demand.

- Fast Reaction to Workload Changes: It quickly responds to changes in workload, dynamically scaling the cluster up or down without manual intervention.

Karpenter is particularly valuable in scenarios where workload demands fluctuate significantly throughout the day. It allows you to scale up during peak times and scale down during off-hours, ensuring you don’t pay for unused resources.

4. Goldilocks

Goldilocks is an open-source tool designed to help users optimize Kubernetes resource requests and limits. It uses the Vertical Pod Autoscaler (VPA) to provide recommendations on how to set proper resource requests and limits for your workloads. By ensuring your resource requests are neither too high (leading to over-provisioning) nor too low (leading to throttling), Goldilocks helps maintain balance and reduces unnecessary costs.

Key Features:

- Resource Optimization: Goldilocks helps optimize Kubernetes resource requests and limits by providing insights into actual usage, enabling you to avoid over-provisioning or under-provisioning.

- Recommendation Engine: It generates recommendations for resource requests and limits based on usage data from the Vertical Pod Autoscaler (VPA), ensuring optimal settings for applications.

- Cost Efficiency: By providing resource tuning recommendations, Goldilocks helps reduce cloud infrastructure costs by preventing over-allocated resources.

- User-Friendly Dashboard: It includes a clear and intuitive interface to display metrics and recommendations, making resource management easier.

- Multi-Namespace Support: Goldilocks allows you to analyze and optimize resource settings across multiple namespaces within Kubernetes.

Goldilocks is ideal for teams that want a straightforward tool to optimize resource allocation and prevent over-provisioning, making it a popular choice for cost-conscious organizations.
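Goldilocks draws its numbers from VPA objects, whose `status.recommendation.containerRecommendations` entries carry `target`, `lowerBound`, and `upperBound` values for each container. The sketch below turns such a status into request and limit suggestions under two hypothetical presets: request and limit both set to the target, or request at the lower bound and limit at the upper bound. The helper name, presets, and sample data are illustrative, not Goldilocks' own code.

```python
def suggest_resources(vpa: dict, preset: str = "target") -> dict[str, dict]:
    """Map each container's VPA recommendation to suggested requests and limits.

    preset="target": requests and limits both use the VPA target.
    preset="bounds": requests use lowerBound, limits use upperBound.
    """
    recommendations = (vpa.get("status", {})
                       .get("recommendation", {})
                       .get("containerRecommendations", []))
    suggestions = {}
    for rec in recommendations:
        if preset == "target":
            requests, limits = rec["target"], rec["target"]
        elif preset == "bounds":
            requests, limits = rec["lowerBound"], rec["upperBound"]
        else:
            raise ValueError(f"unknown preset: {preset}")
        suggestions[rec["containerName"]] = {"requests": dict(requests),
                                             "limits": dict(limits)}
    return suggestions


vpa = {"status": {"recommendation": {"containerRecommendations": [{
    "containerName": "app",
    "lowerBound": {"cpu": "100m", "memory": "200Mi"},
    "target": {"cpu": "250m", "memory": "300Mi"},
    "upperBound": {"cpu": "900m", "memory": "1Gi"},
}]}}}
print(suggest_resources(vpa, preset="bounds"))
```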

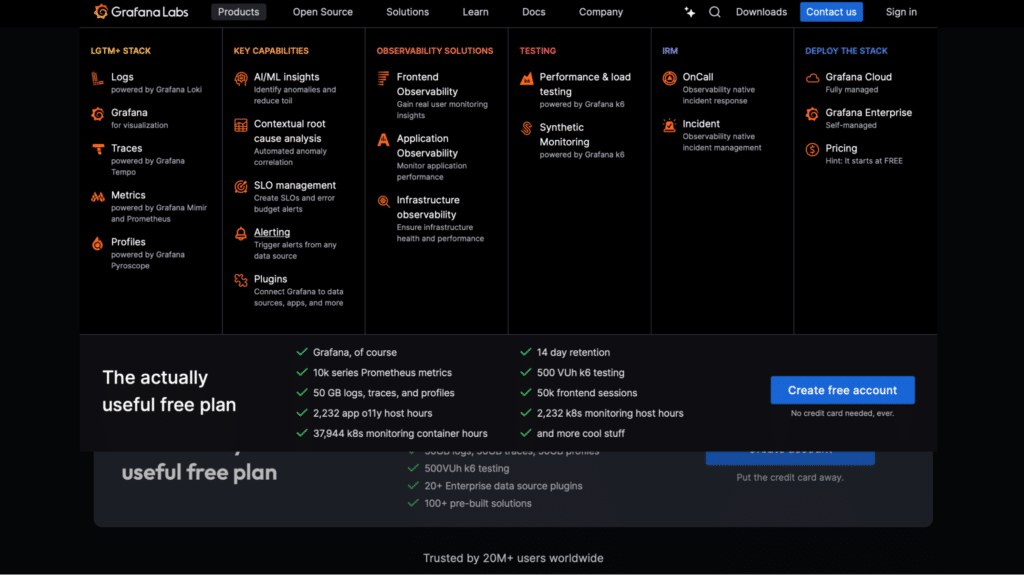

5. Grafana

Grafana is widely known for its powerful data visualization capabilities, often used in combination with Prometheus for monitoring Kubernetes clusters. While not solely a cost optimization tool, Grafana enables teams to build custom dashboards that track resource usage, enabling better cost visibility and management.

Key Features:

- Data Visualization: Grafana allows users to visualize complex data from multiple sources using customizable dashboards, charts, and graphs.

- Multi-Source Data Support: It integrates with a wide variety of data sources such as Prometheus, Graphite, Elasticsearch, InfluxDB, and many more, making it highly flexible for different environments.

- Alerting System: Built-in alerting capabilities let you set up alerts based on data thresholds, which can trigger notifications via channels like email, Slack, or PagerDuty.

- Dashboard Templates: Pre-built templates make it easy to create dashboards for common use cases, saving time and effort.

- Anomaly Detection and Analytics: Advanced analytics features include machine learning and statistical tools for detecting anomalies and analyzing trends in your data.

- User Access Control: Role-based access management ensures secure, customized access to dashboards and features.

- Extensible Plugins: A rich ecosystem of plugins allows you to extend Grafana’s functionality with additional data sources, visualizations, and panels.

With Grafana, you can visualize cost-related data alongside other performance metrics, offering a comprehensive view of cluster health and expenditure. This makes it a versatile tool for cost monitoring and decision-making.

How to Choose a Kubernetes Cost Optimization Tool

When choosing a Kubernetes cost optimization tool, it’s important to consider the following factors:

| Factor | What to Look For |
|---|---|
| Visibility | • Clear cost breakdown by namespace, deployment, and pod • Real-time and historical data for insight into cost drivers |
| Automation | • Automated resource rightsizing and anomaly alerts • Dynamic autoscaling to prevent over- and under-provisioning |
| Integration | • Integrates with AWS, GCP, Azure, and CI/CD tools for unified cost and performance views |
| Scalability | • Scales with workloads, from small to large clusters • Maintains high performance and actionable insights as infrastructure grows |
| Support and Updates | • Actively maintained and regularly updated • Strong community or vendor support for quick issue resolution and smooth compatibility with new Kubernetes versions |

Conclusion

Kubernetes cost optimization is a continuous process that requires diligent monitoring, proactive management, and the right tools. By following best practices like setting resource limits, implementing autoscaling, and leveraging multi-tenancy, organizations can significantly reduce Kubernetes costs while maintaining efficient operations.

In the evolving world of cloud-native applications, the ability to optimize costs without sacrificing performance is a critical skill for any organization. By taking a proactive approach to cost optimization, you can ensure that your Kubernetes environments are not only powerful but also cost-efficient, providing a balance between functionality and financial responsibility.